Miquel Perello Nieto

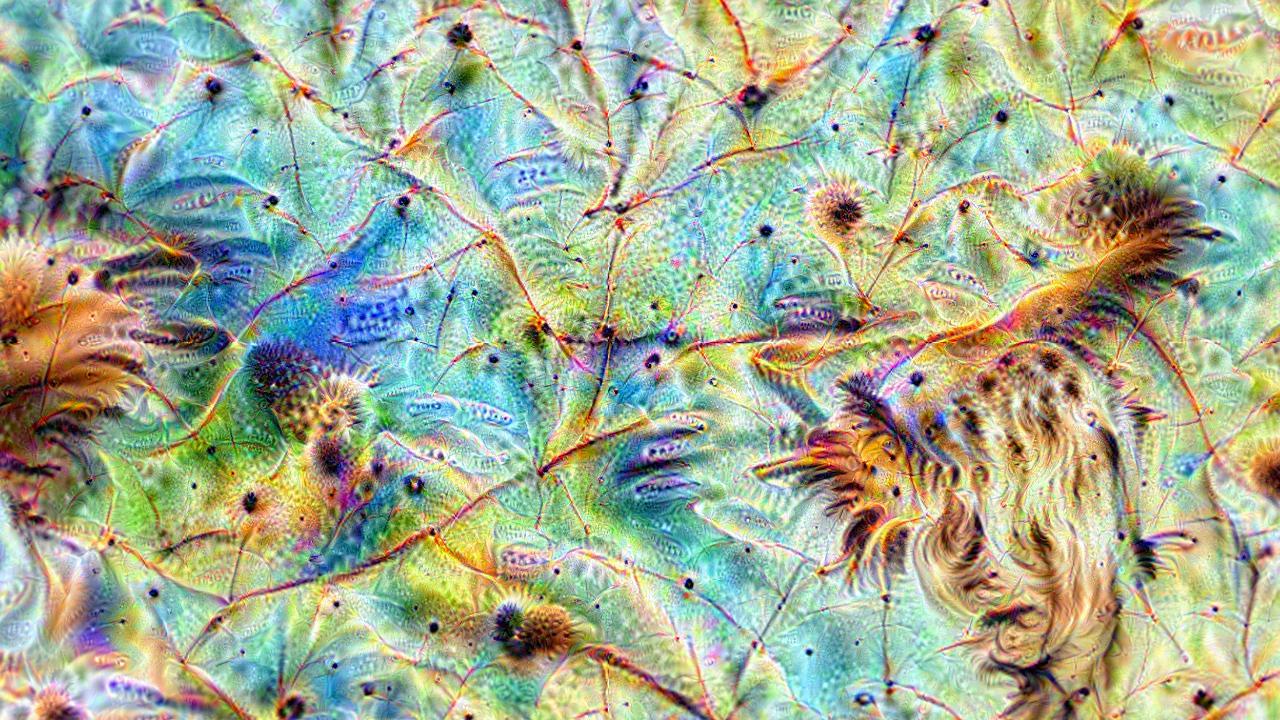

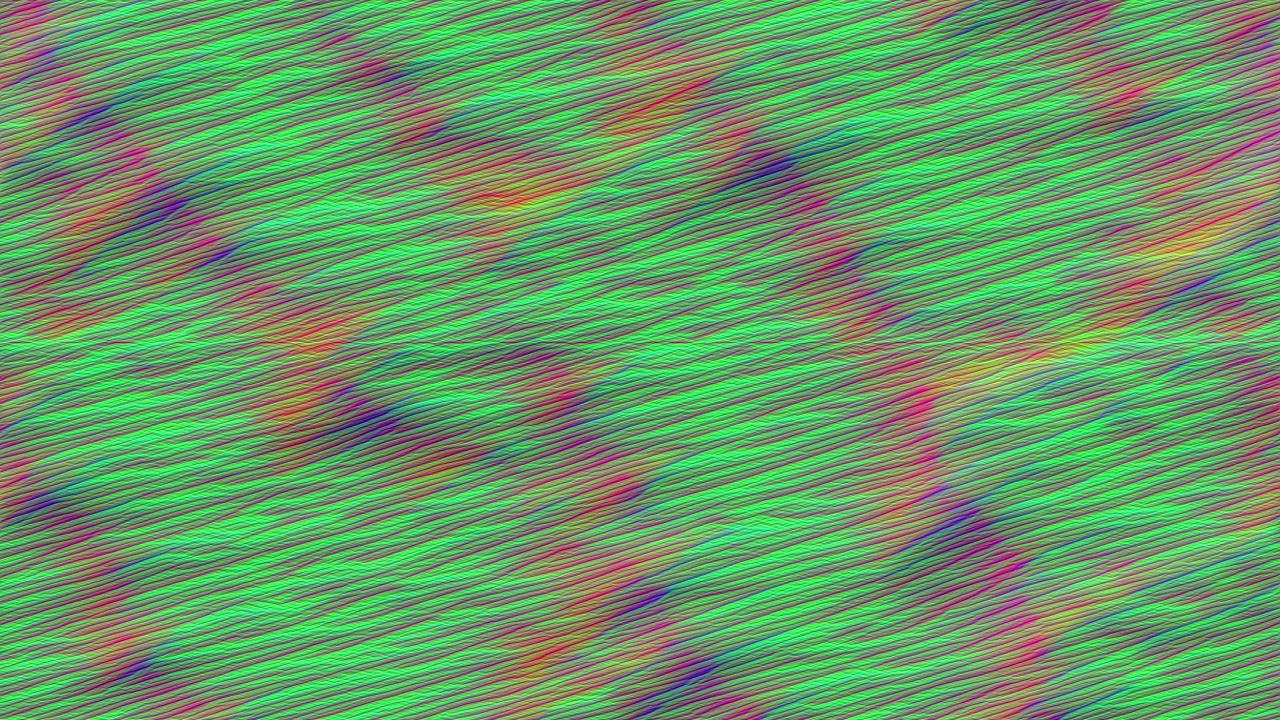

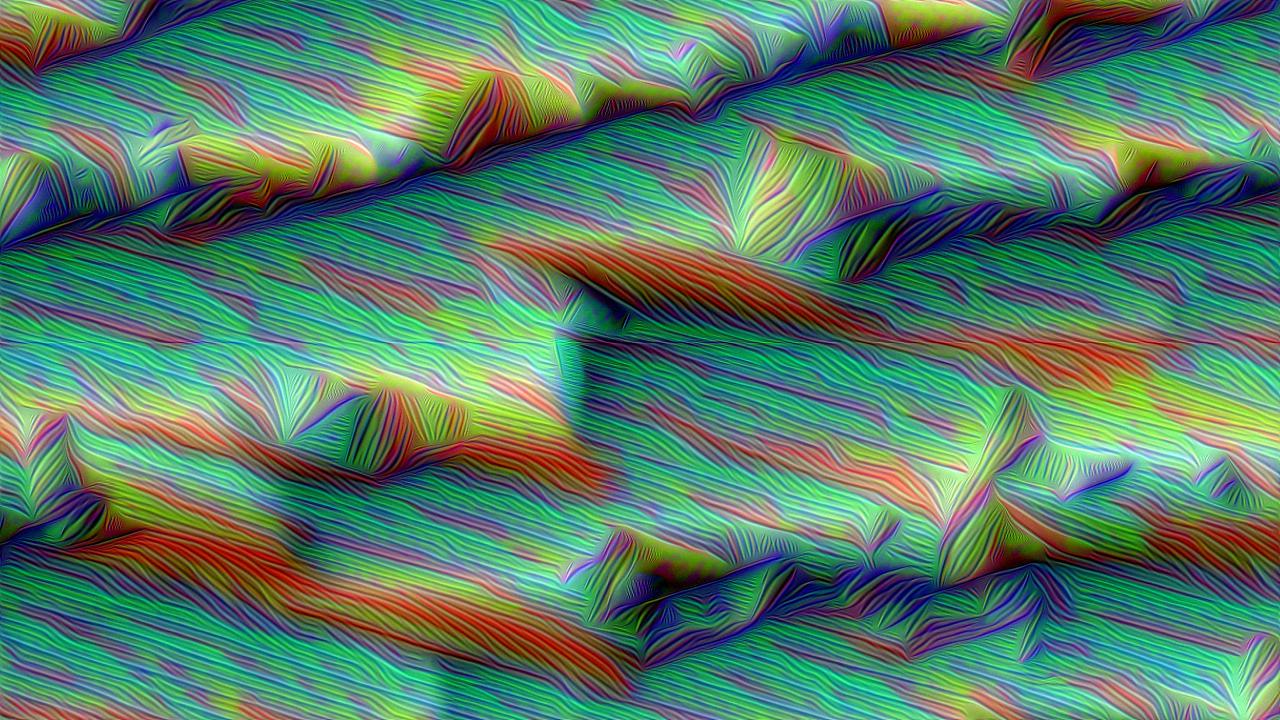

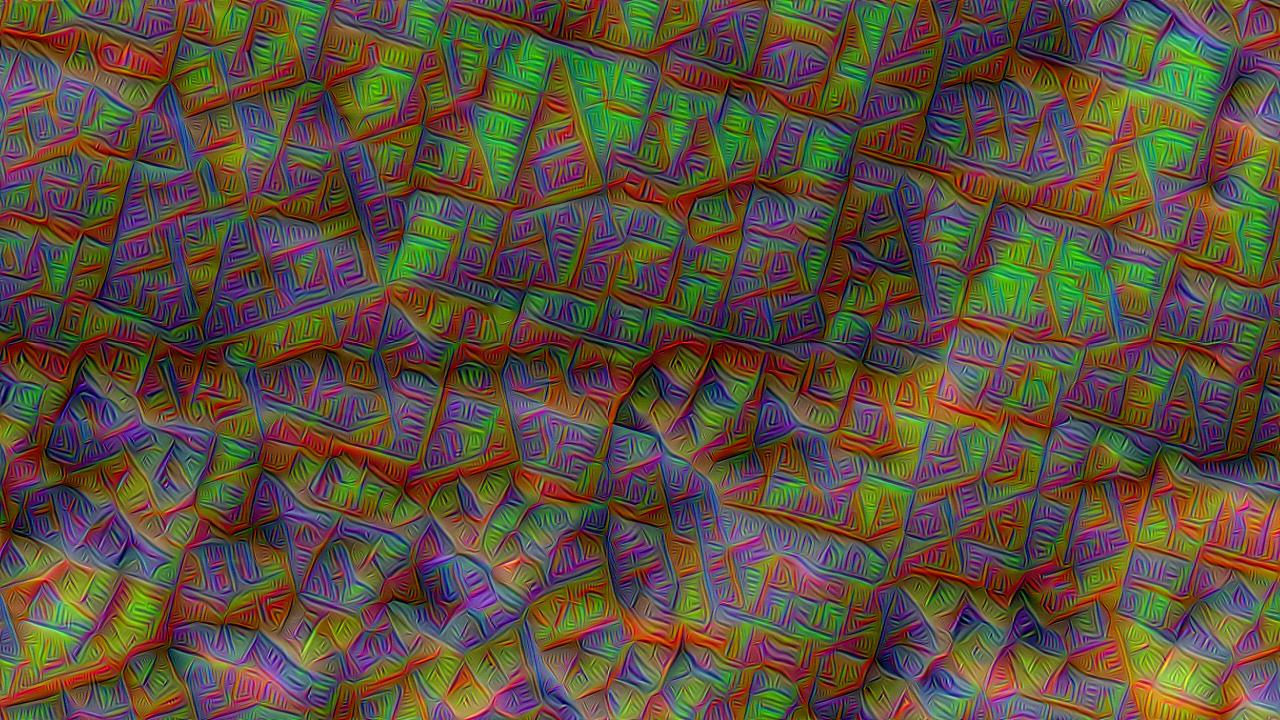

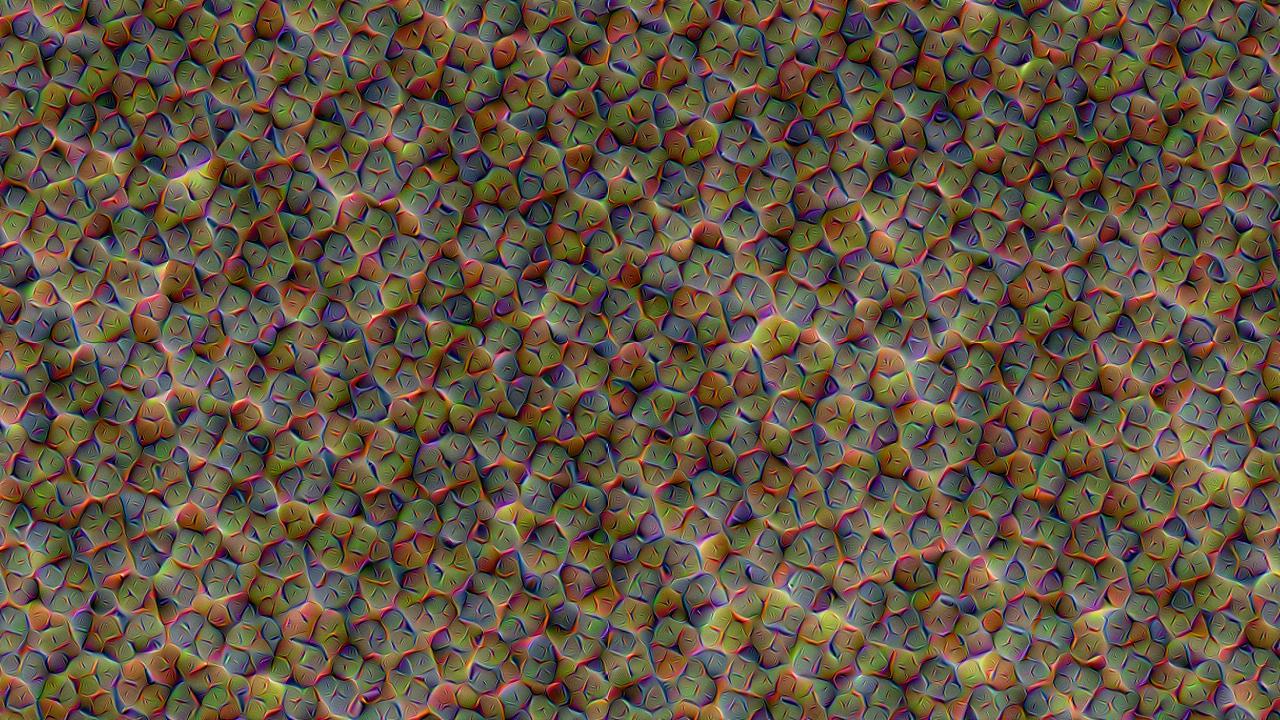

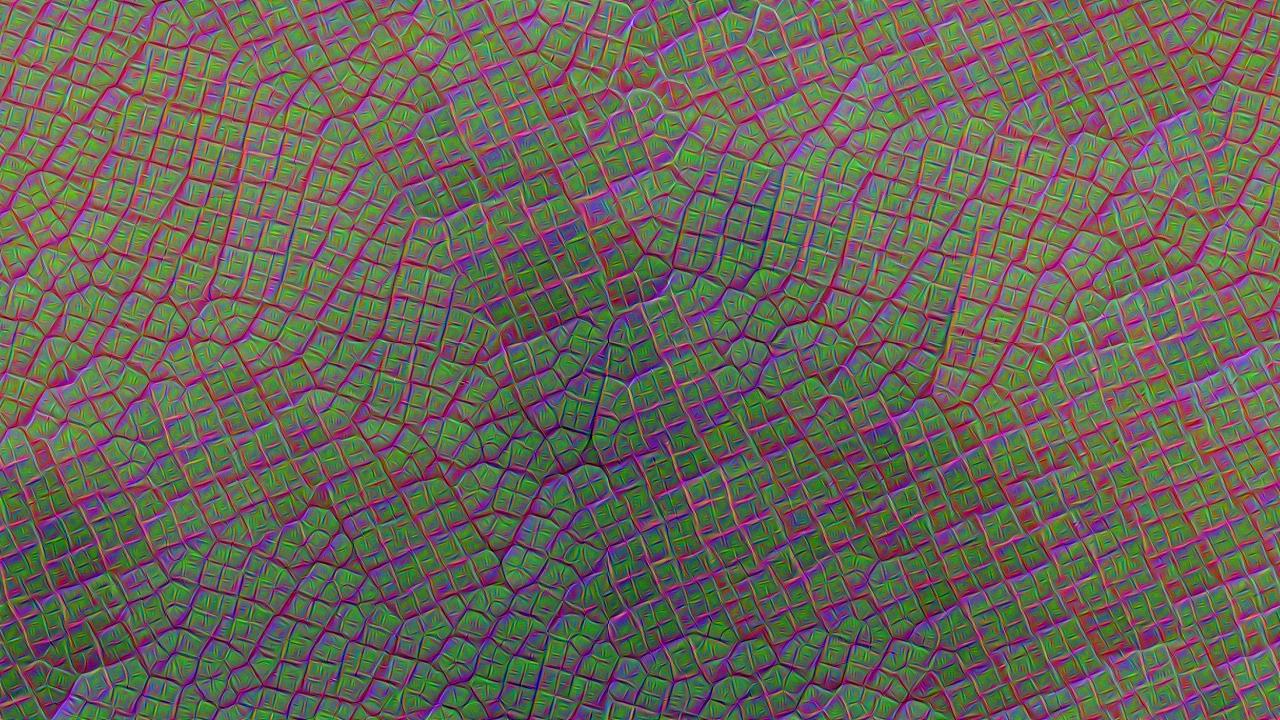

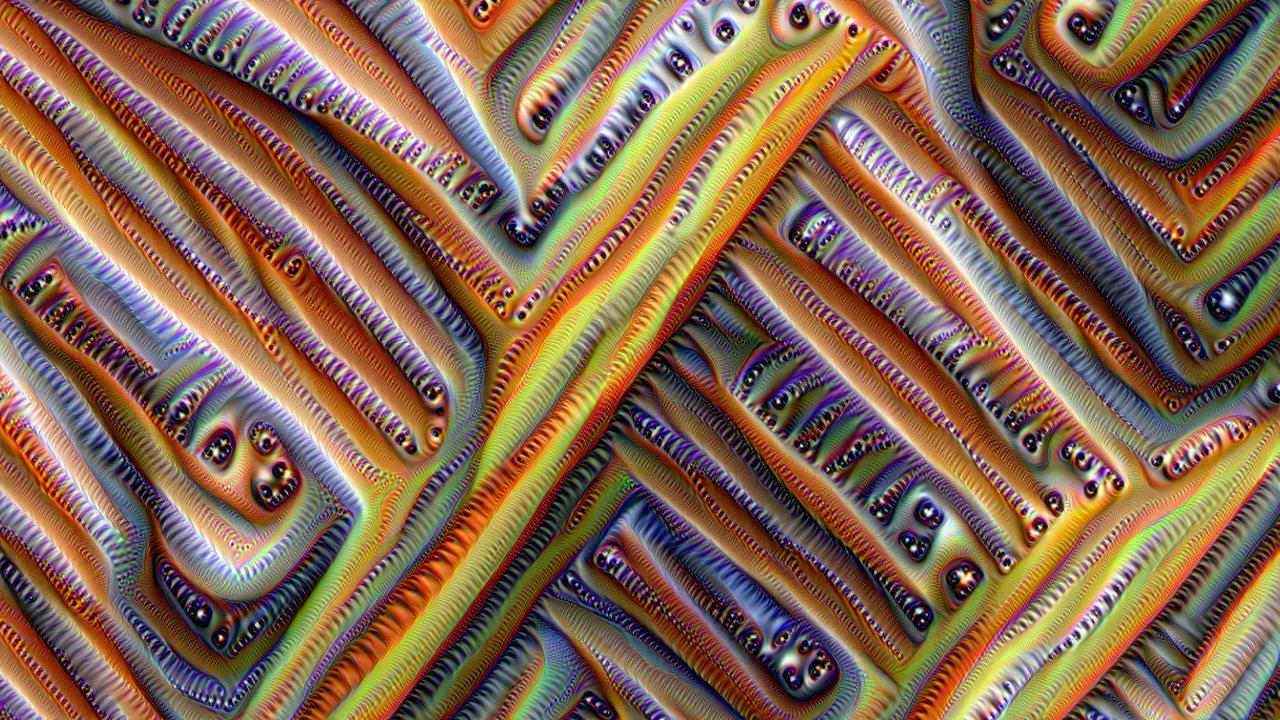

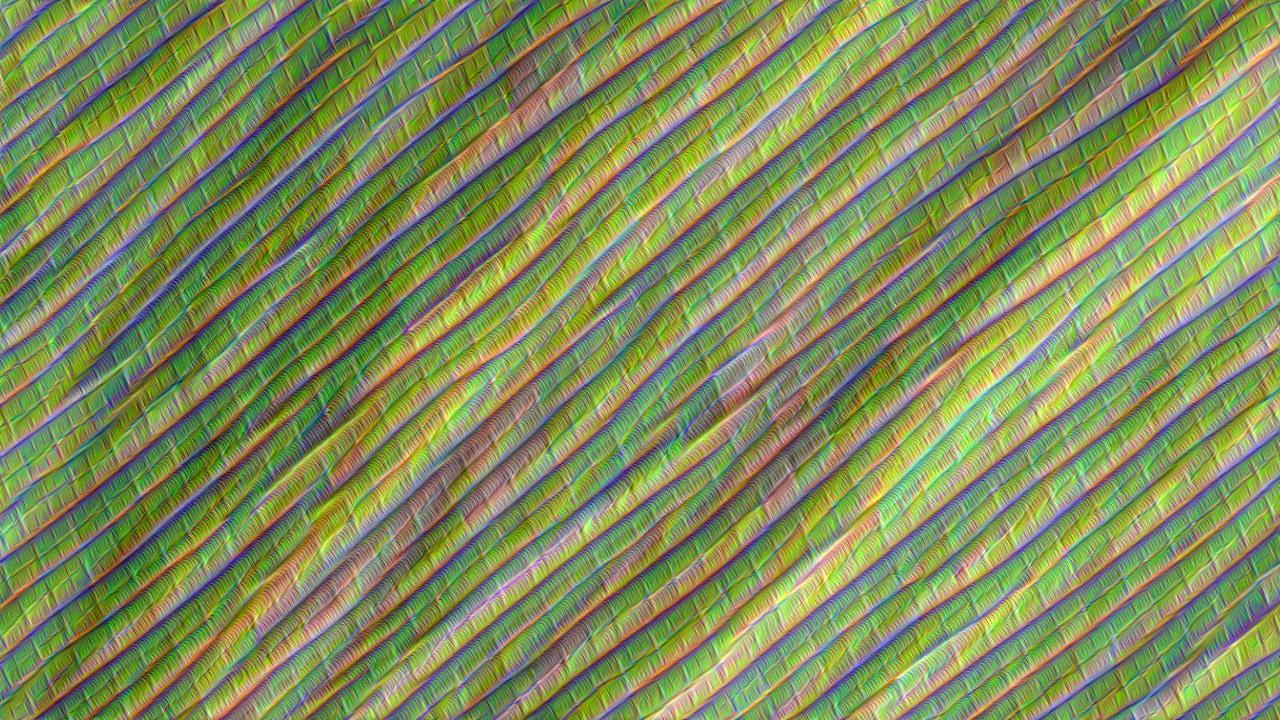

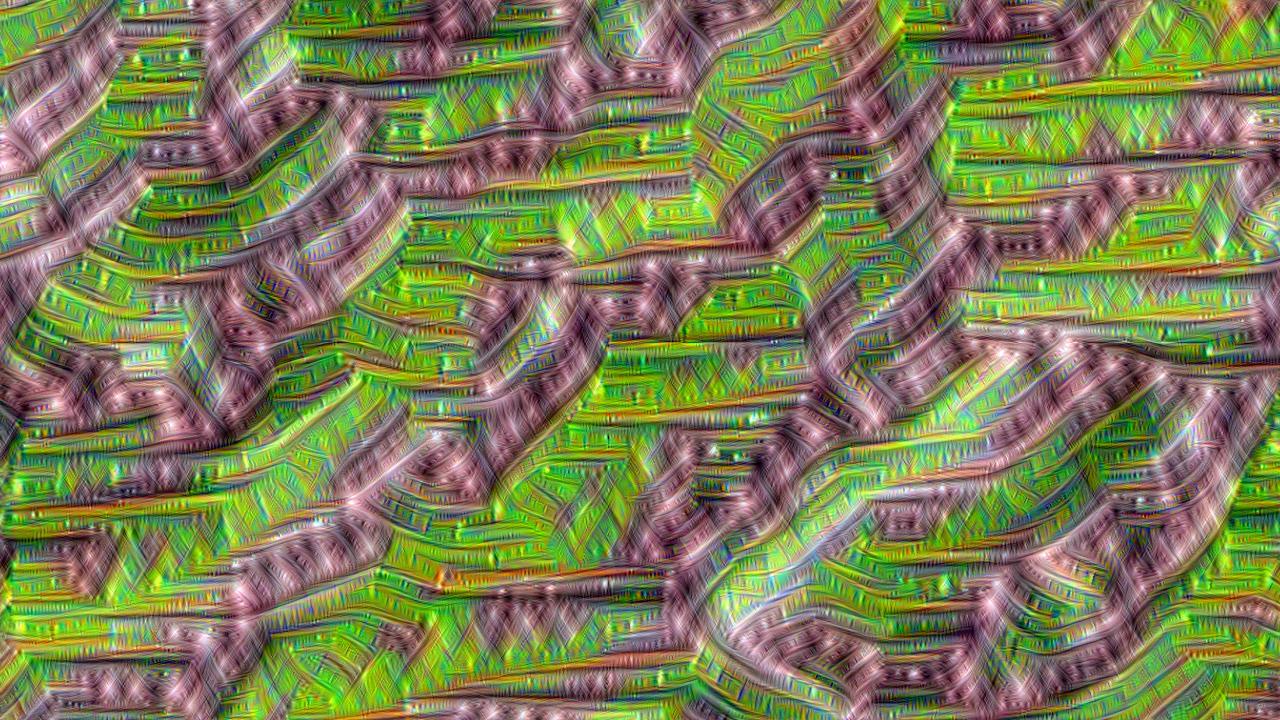

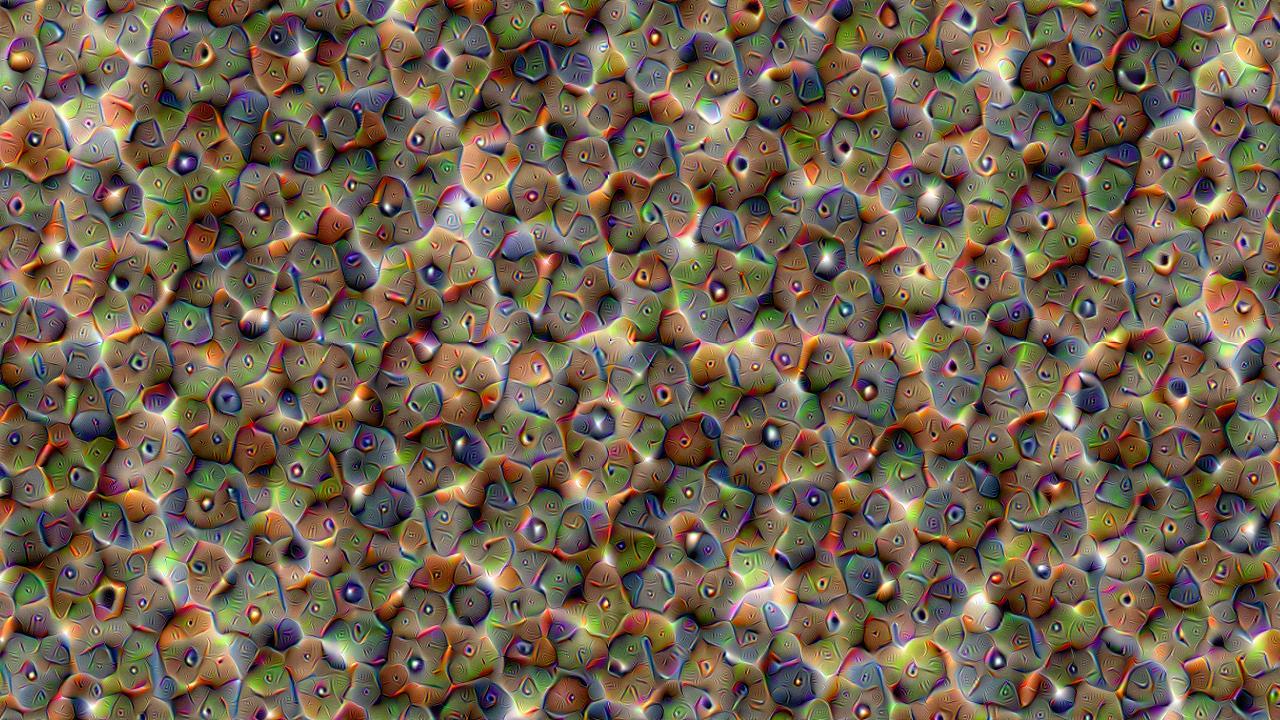

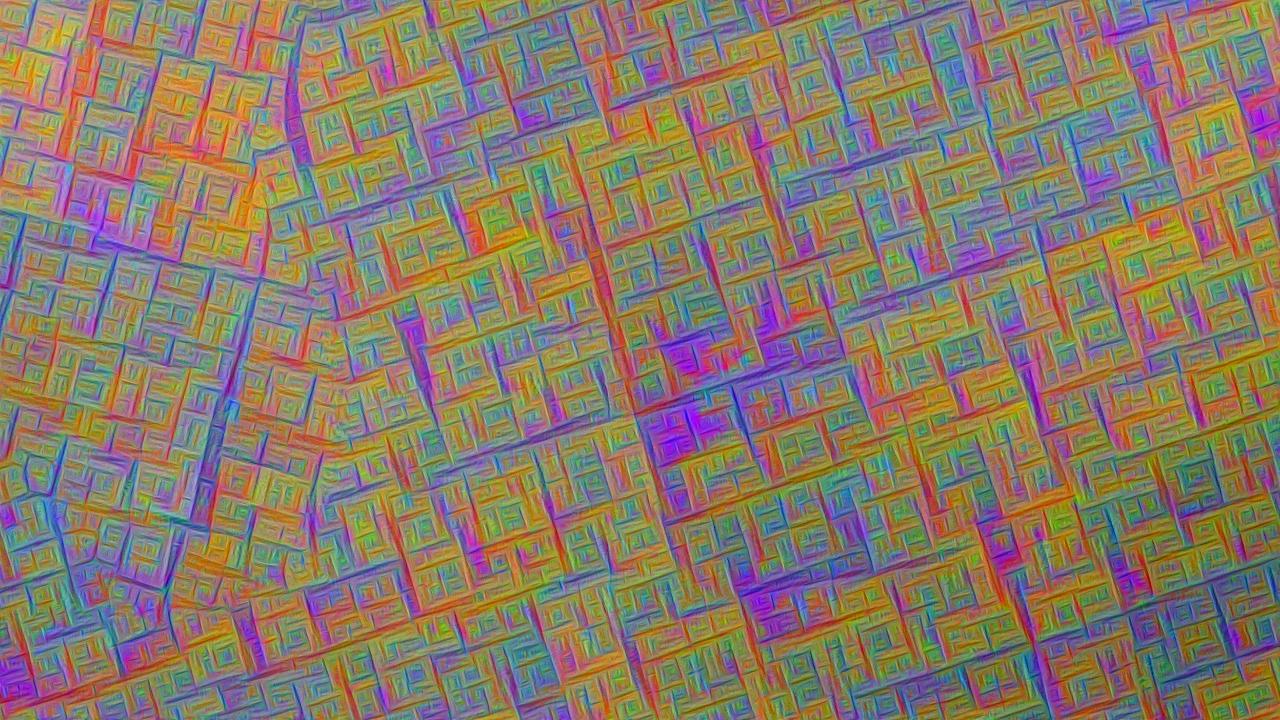

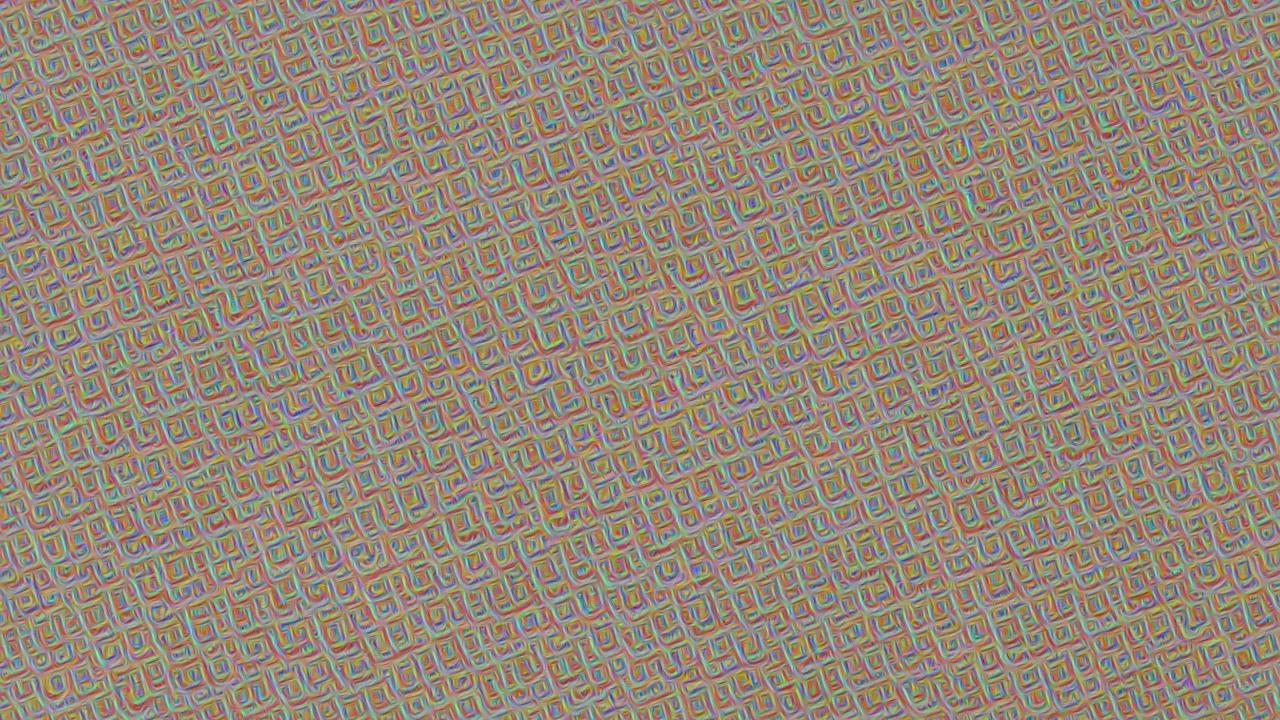

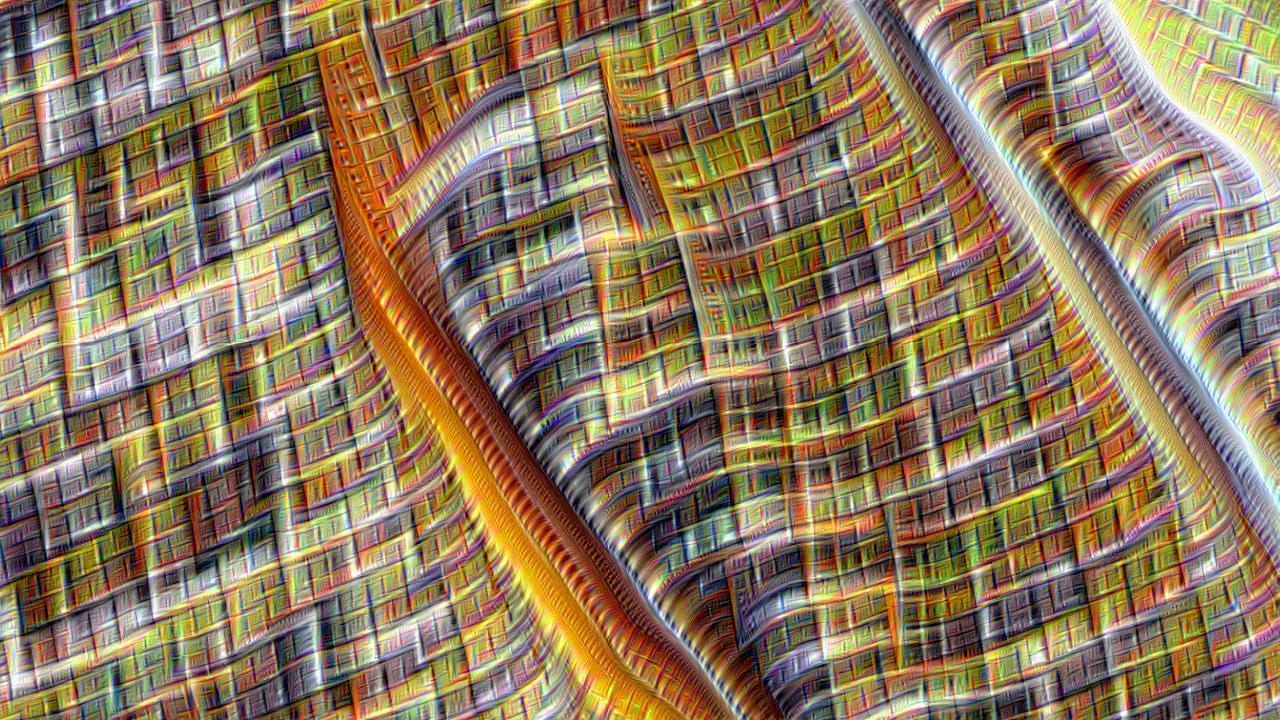

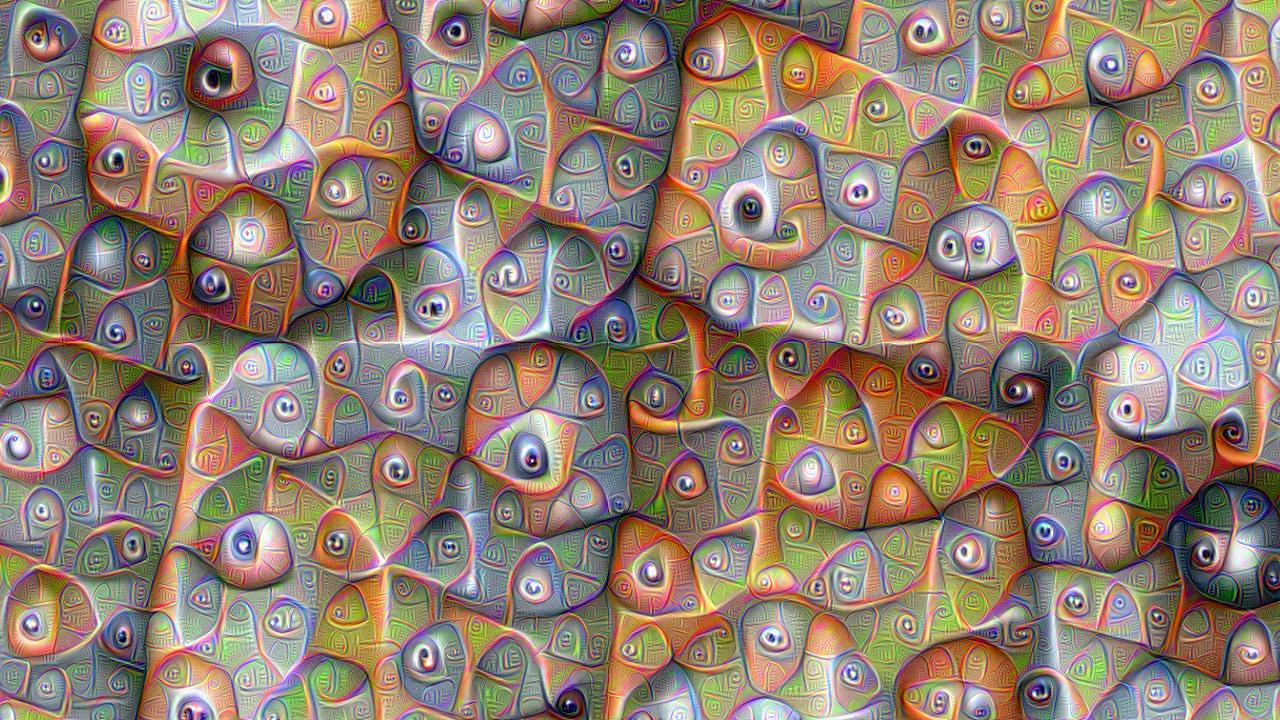

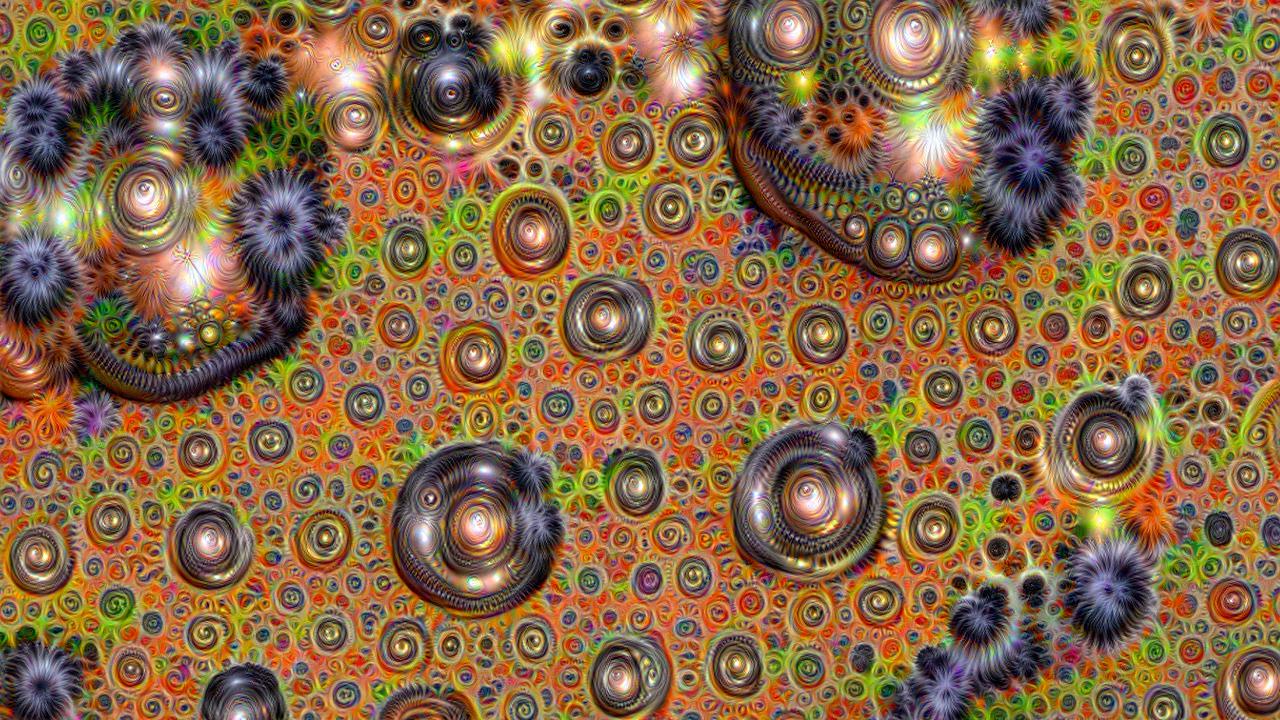

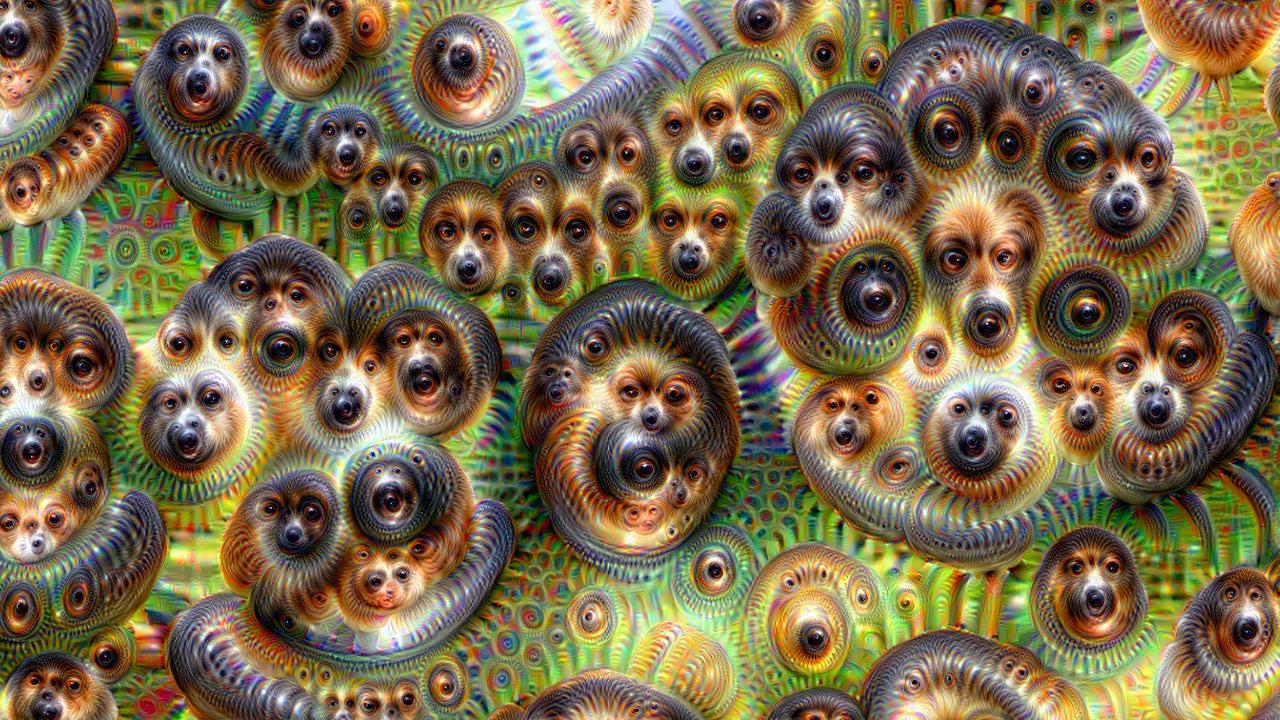

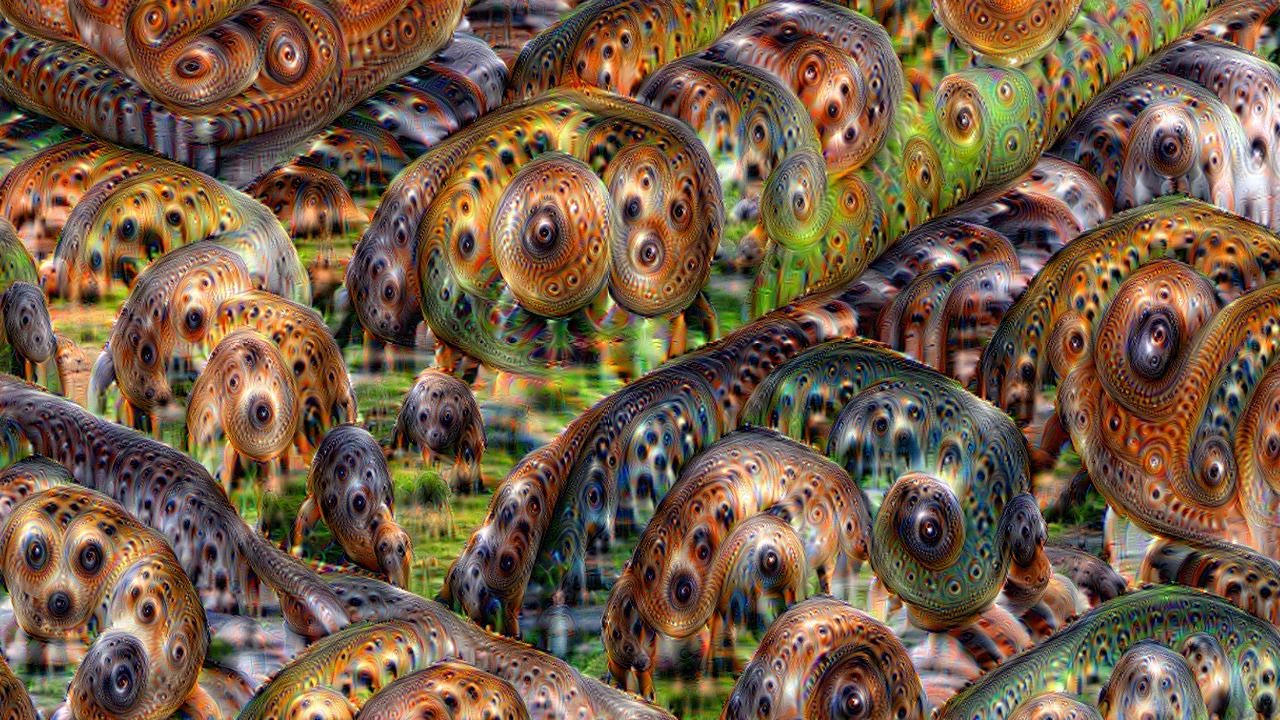

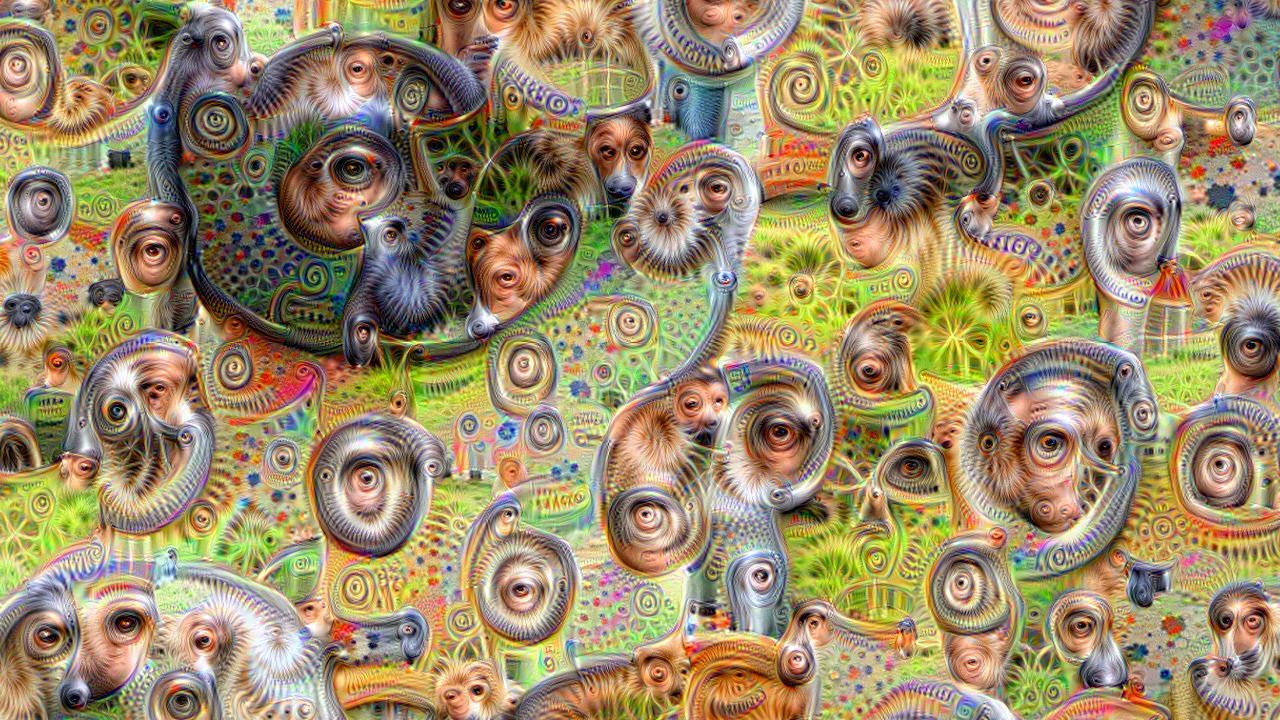

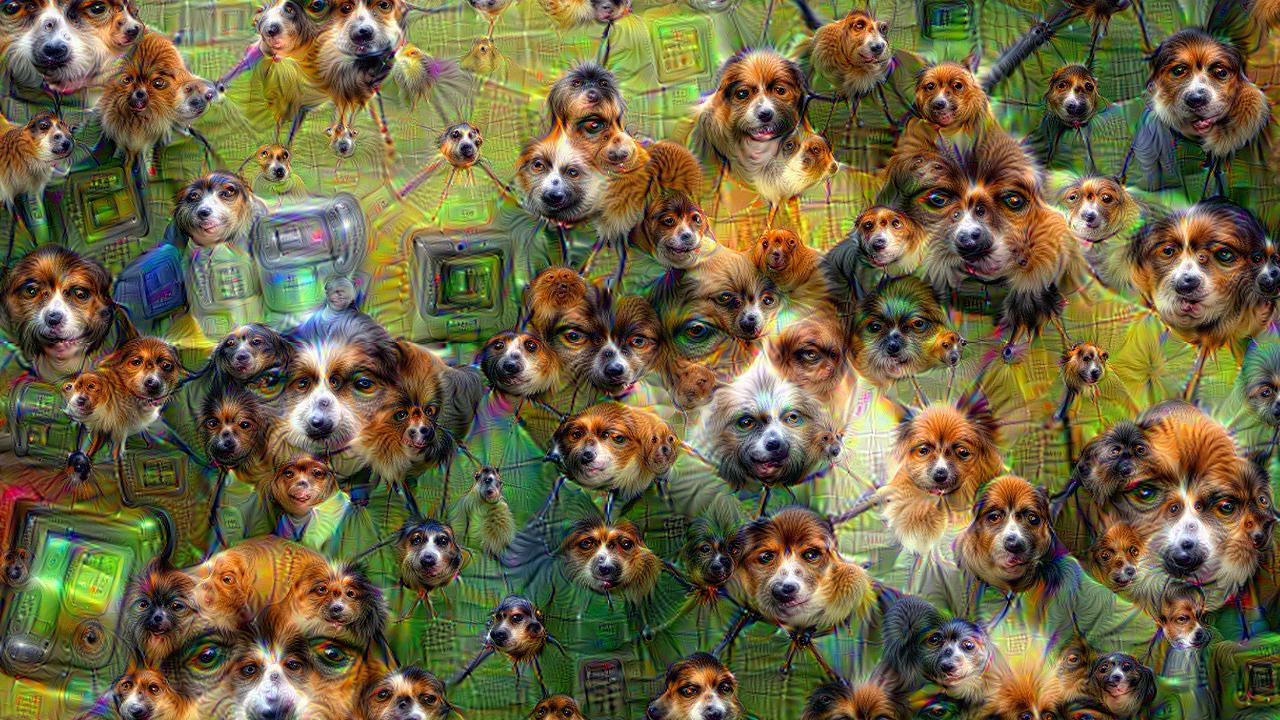

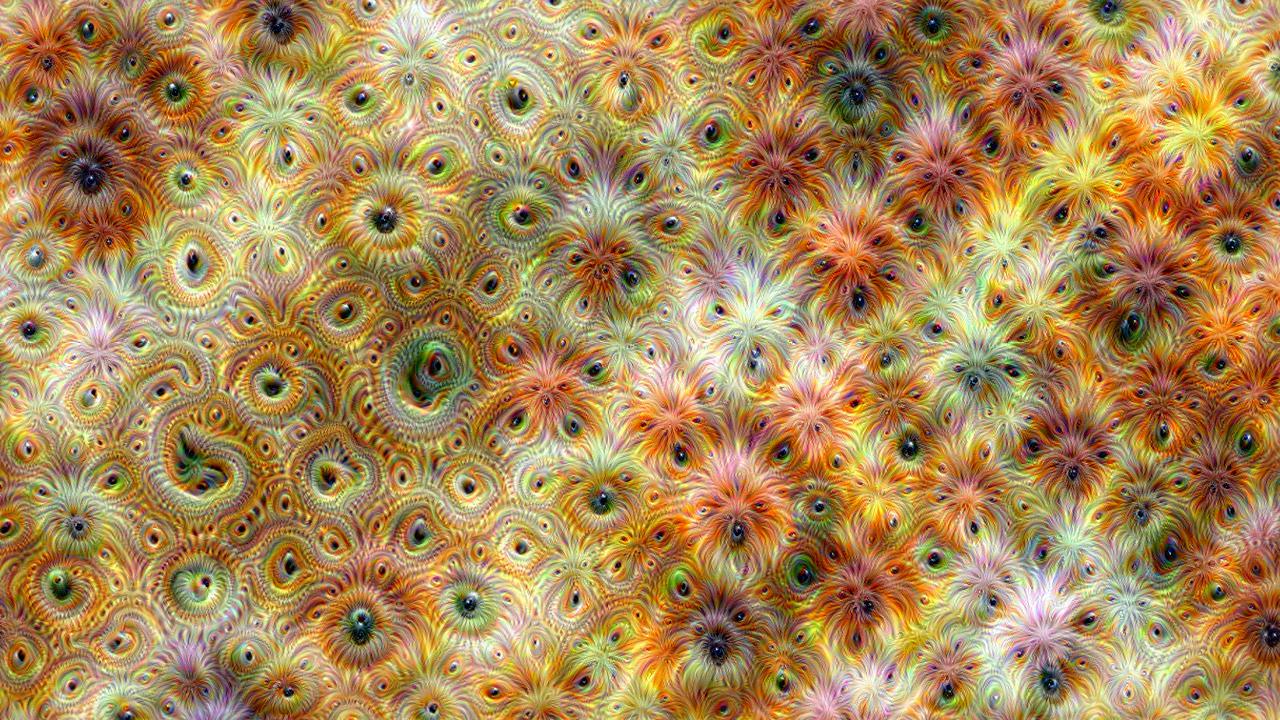

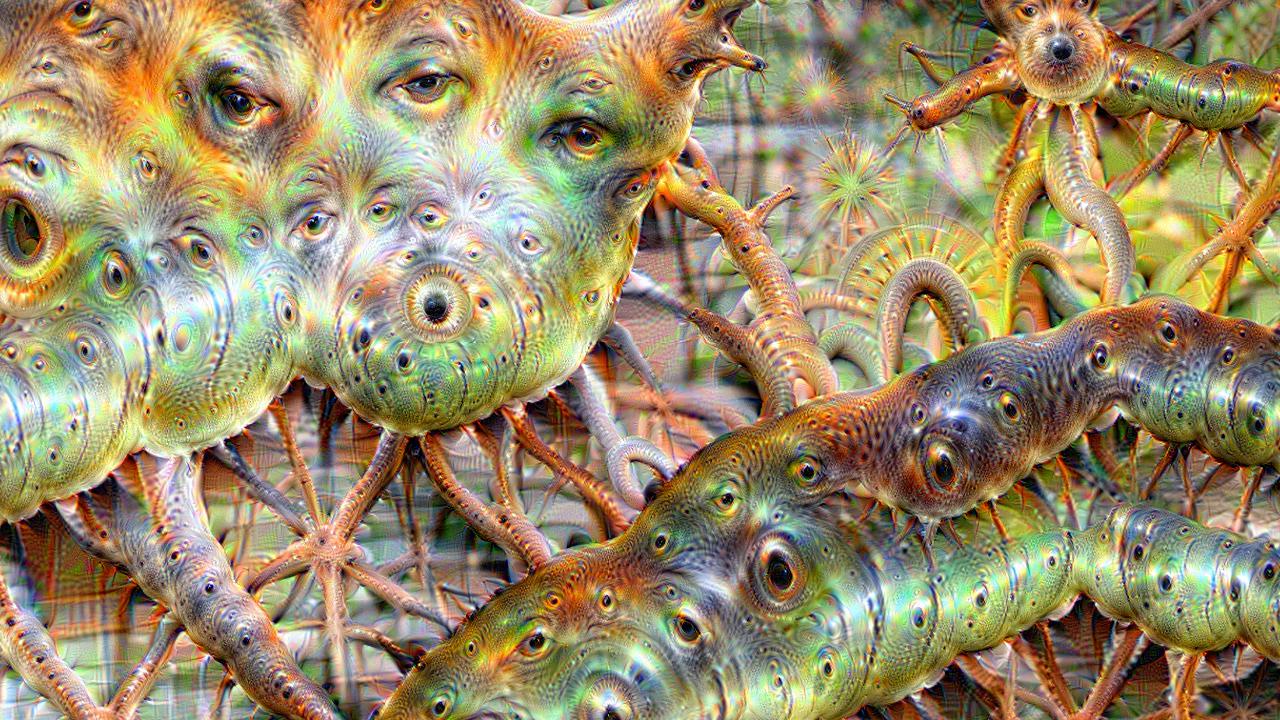

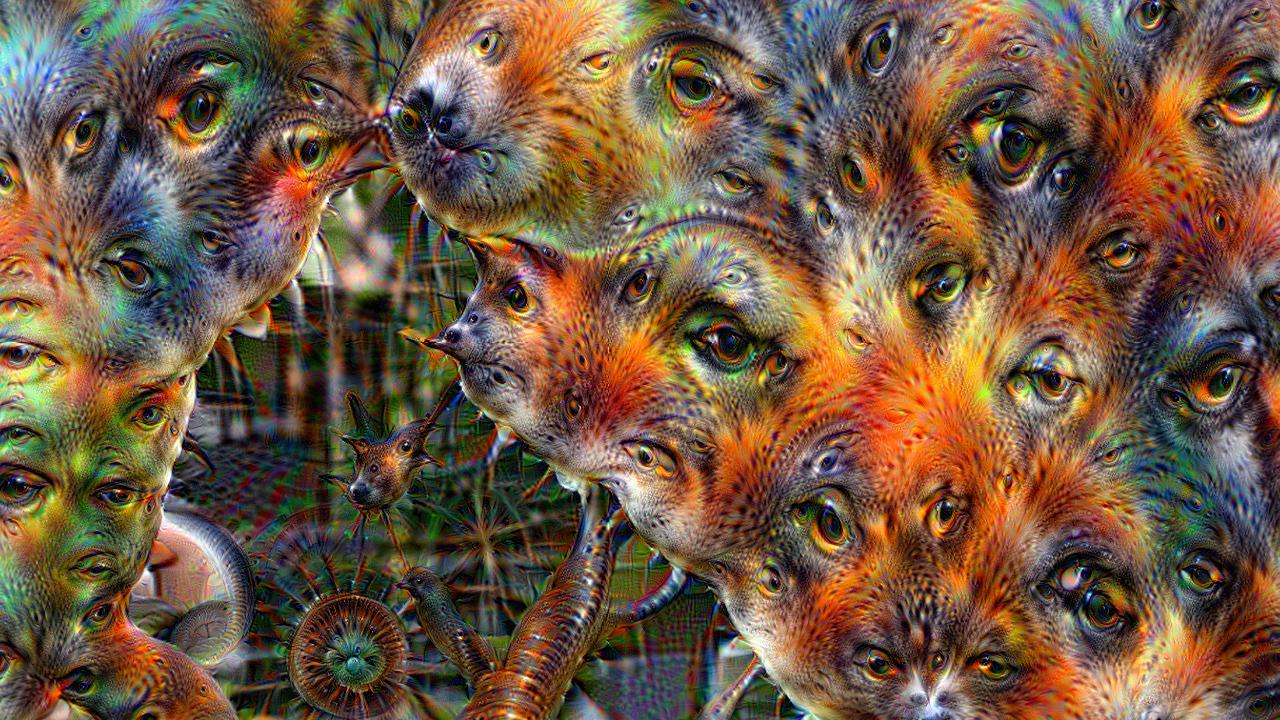

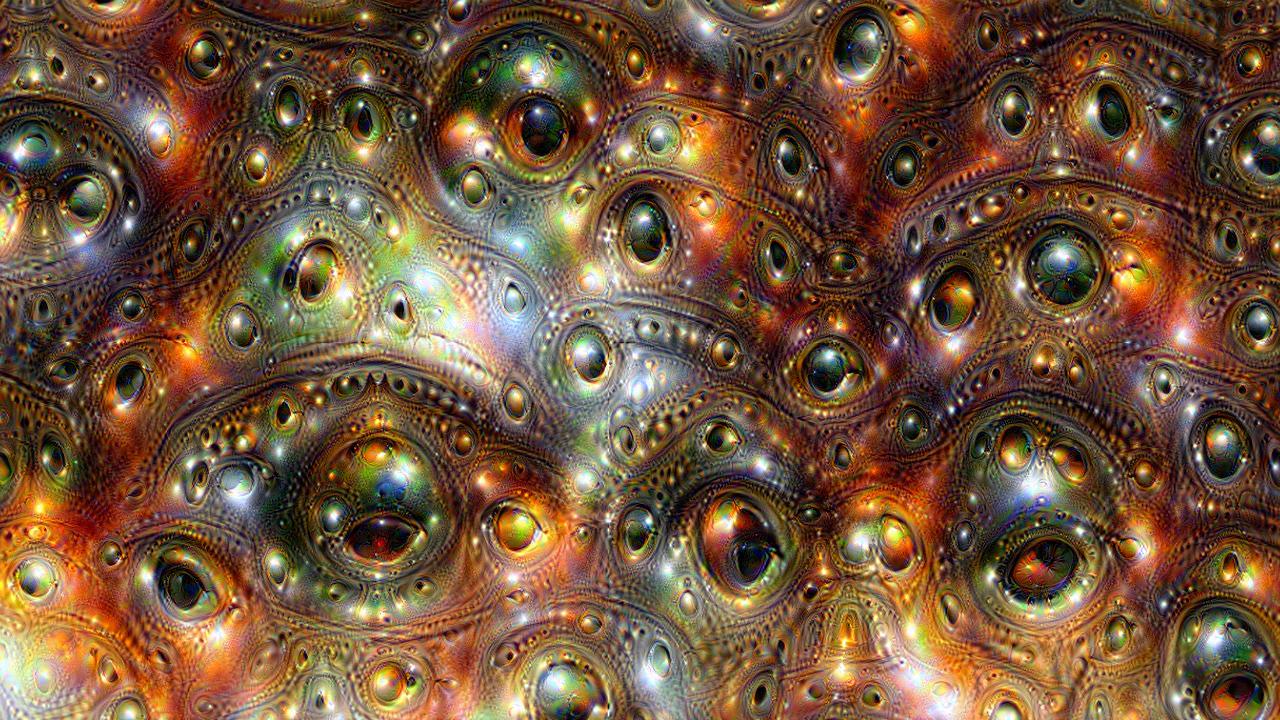

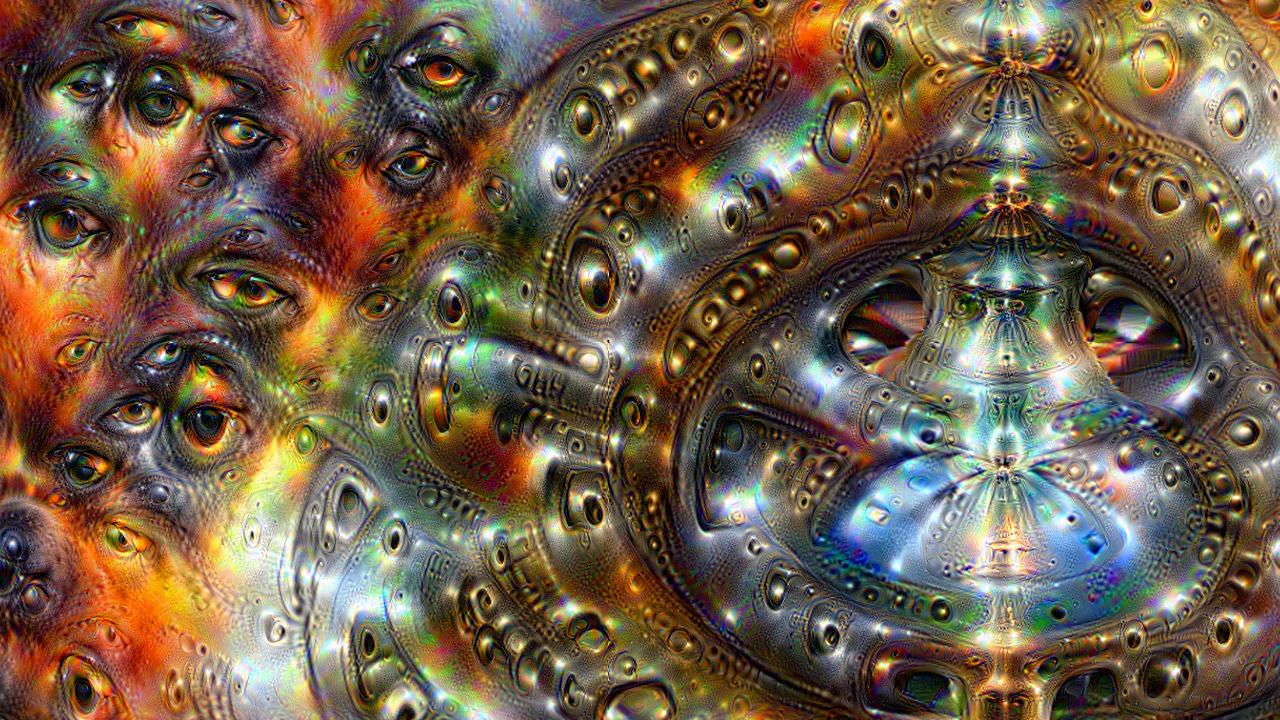

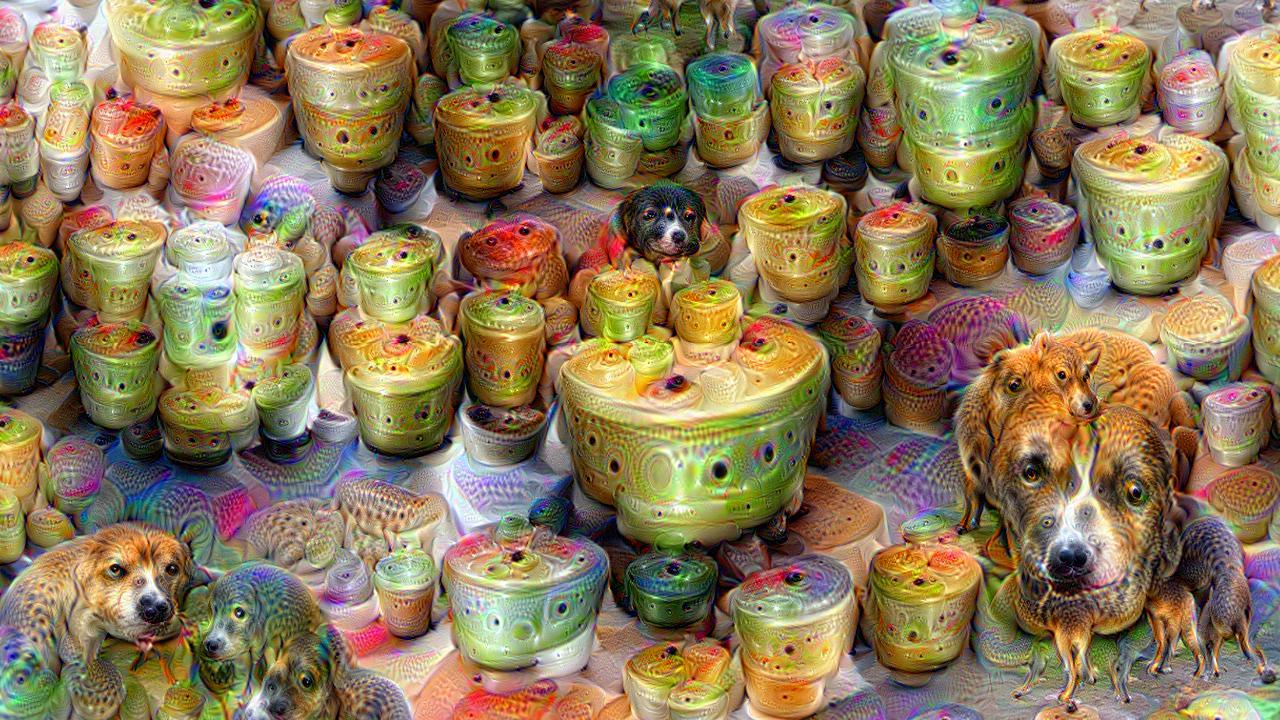

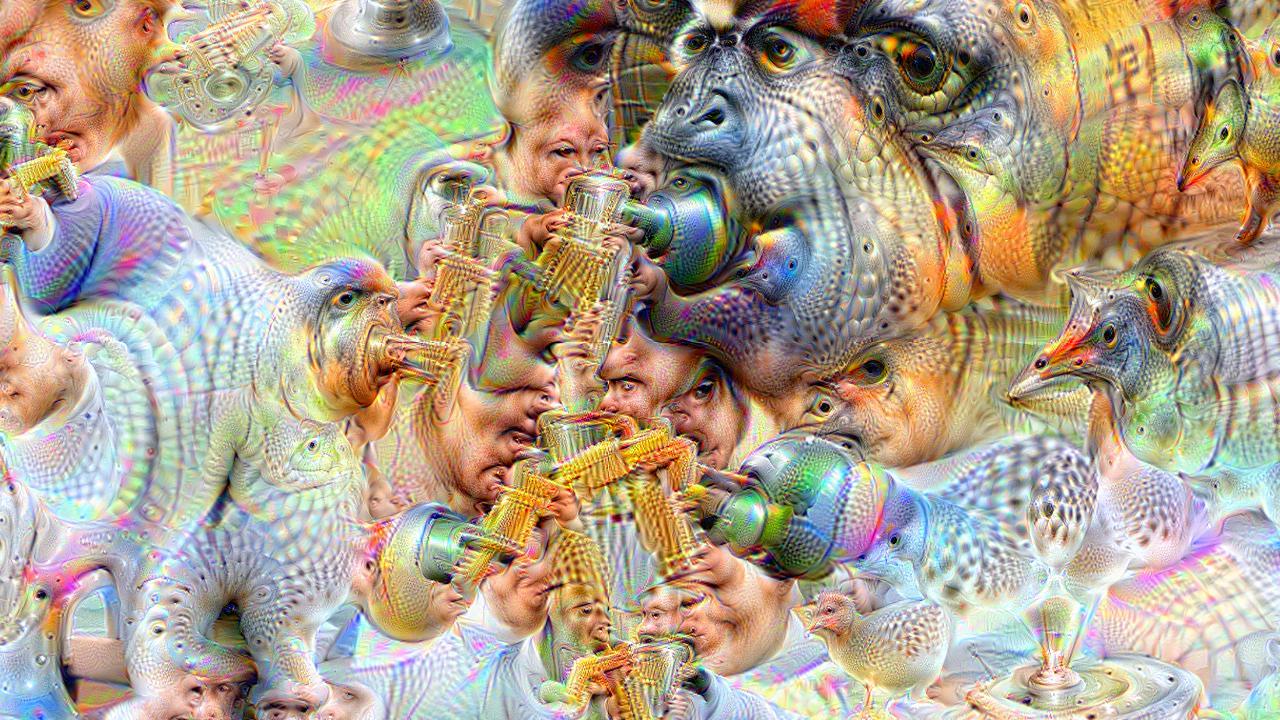

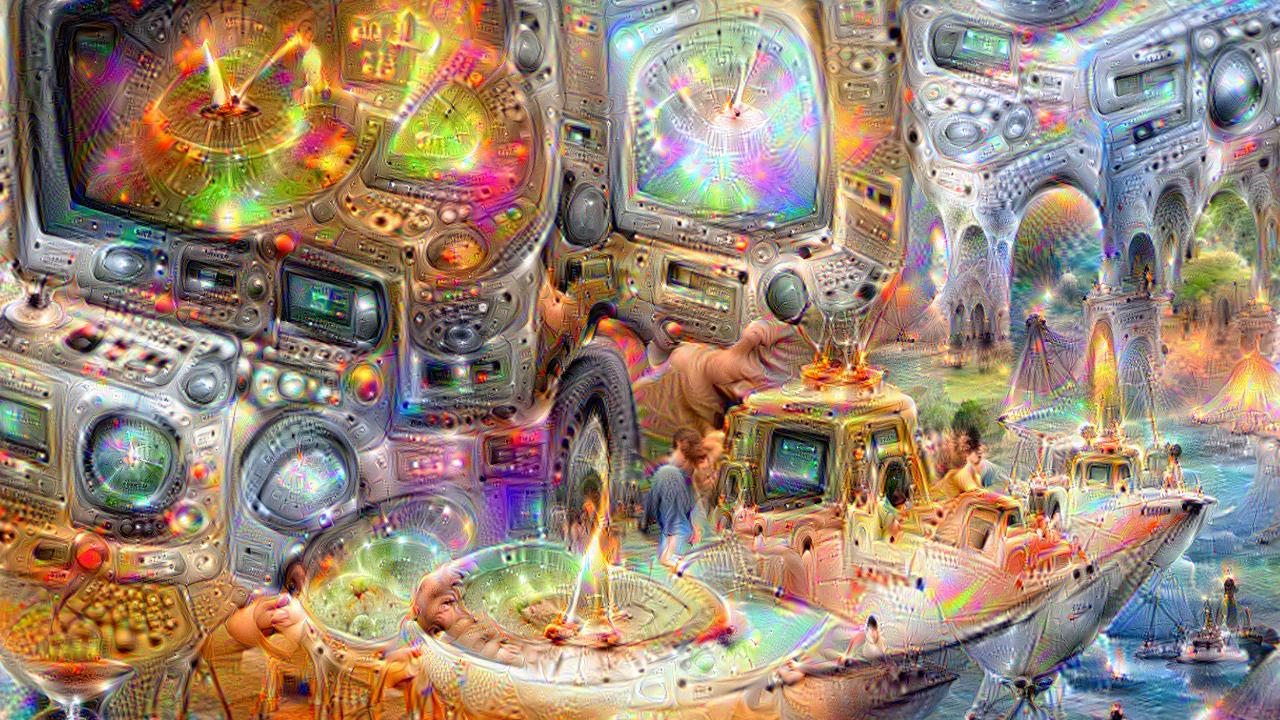

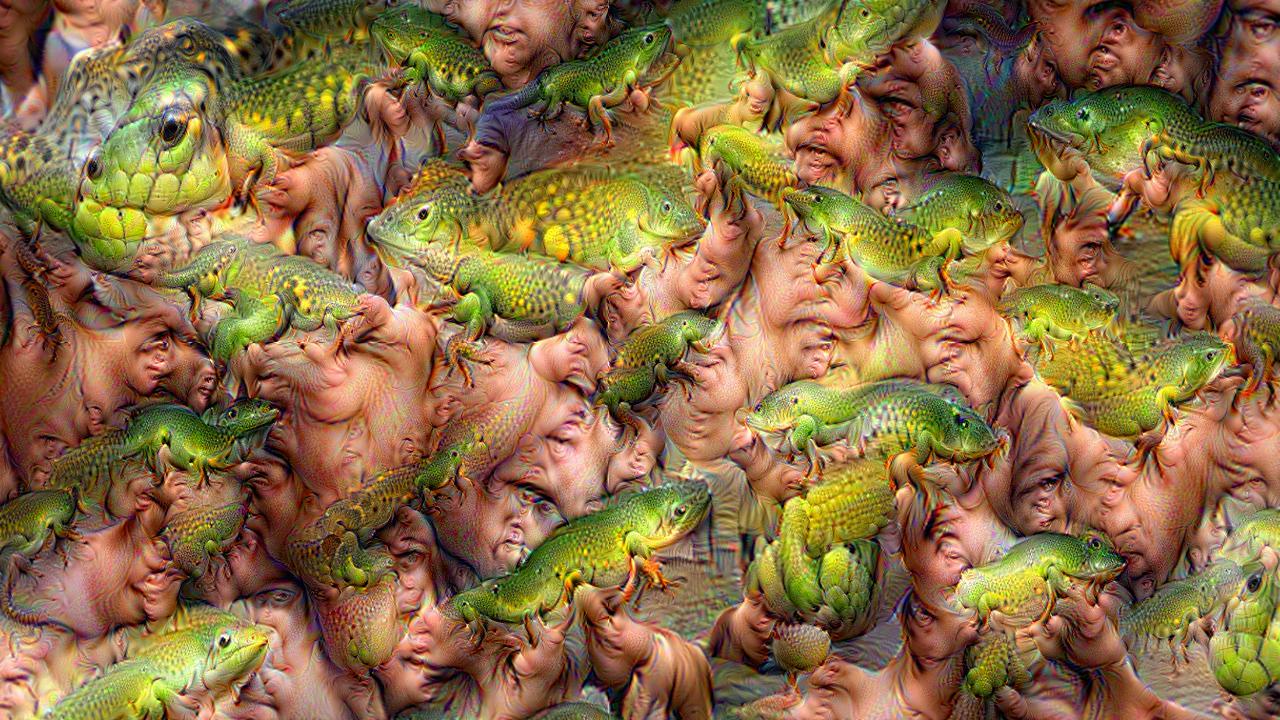

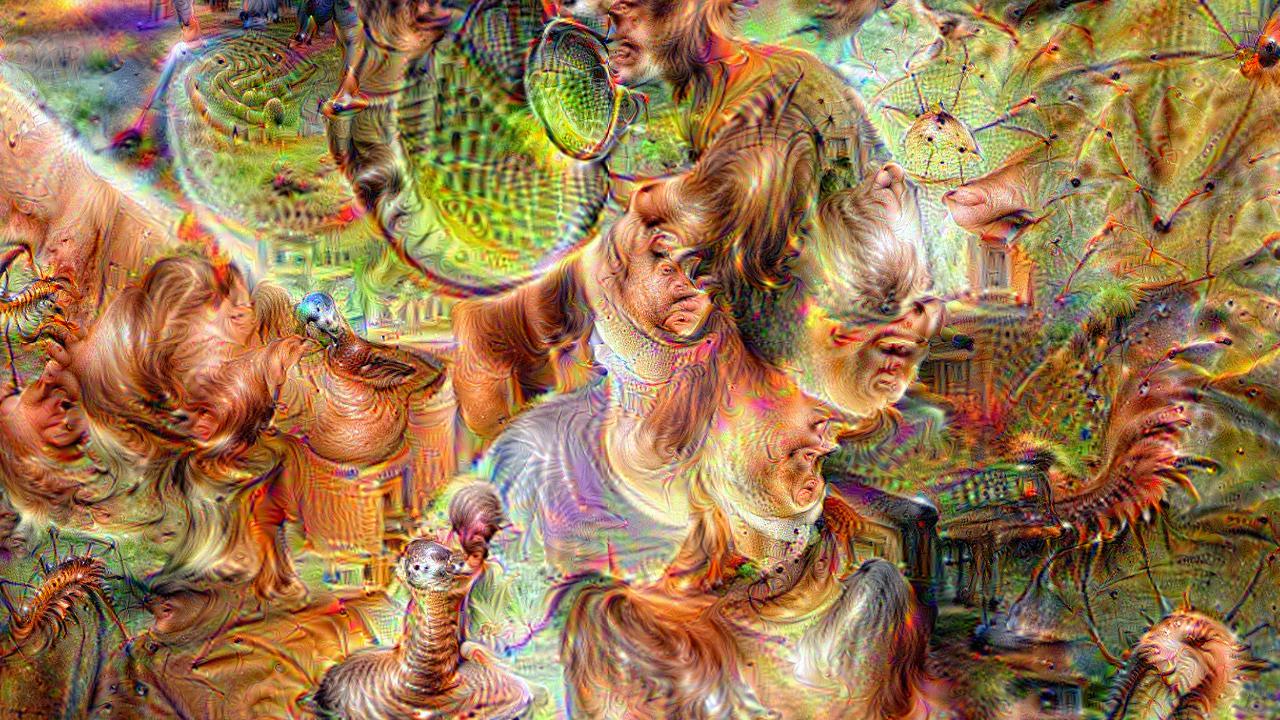

Figure: Portion of a frame created with Google's Deep Dream code

Deep dreams

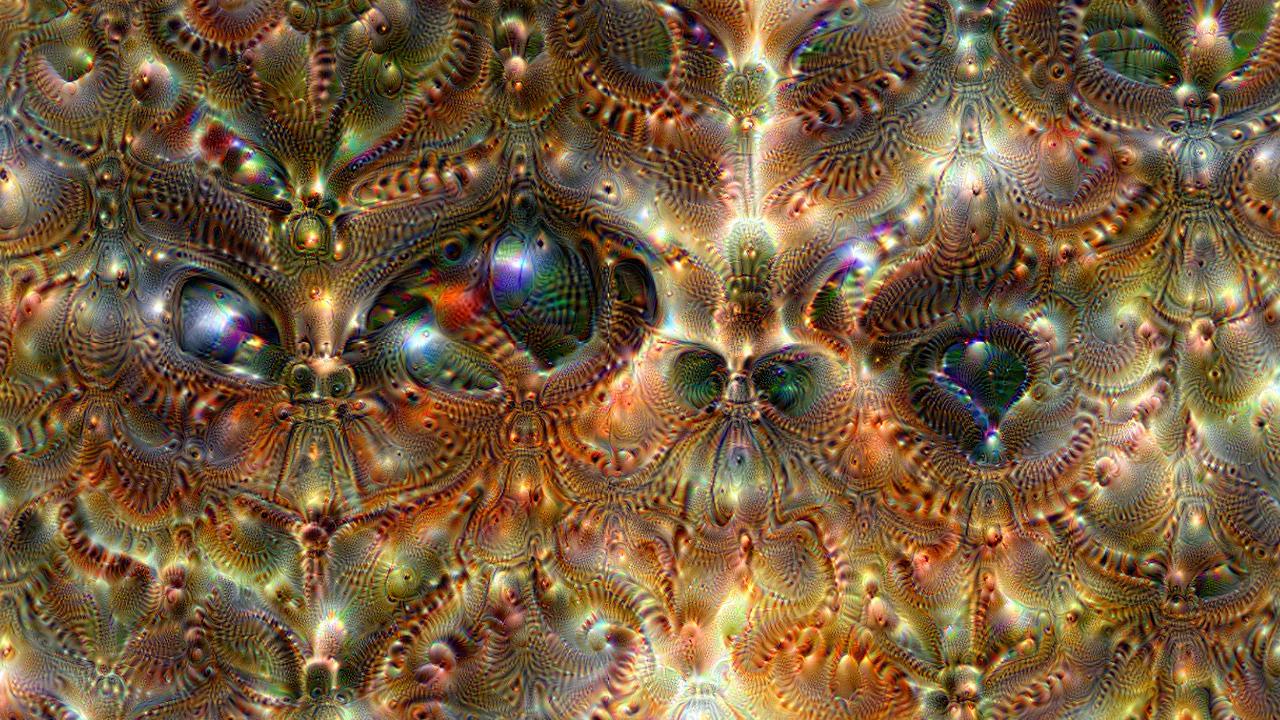

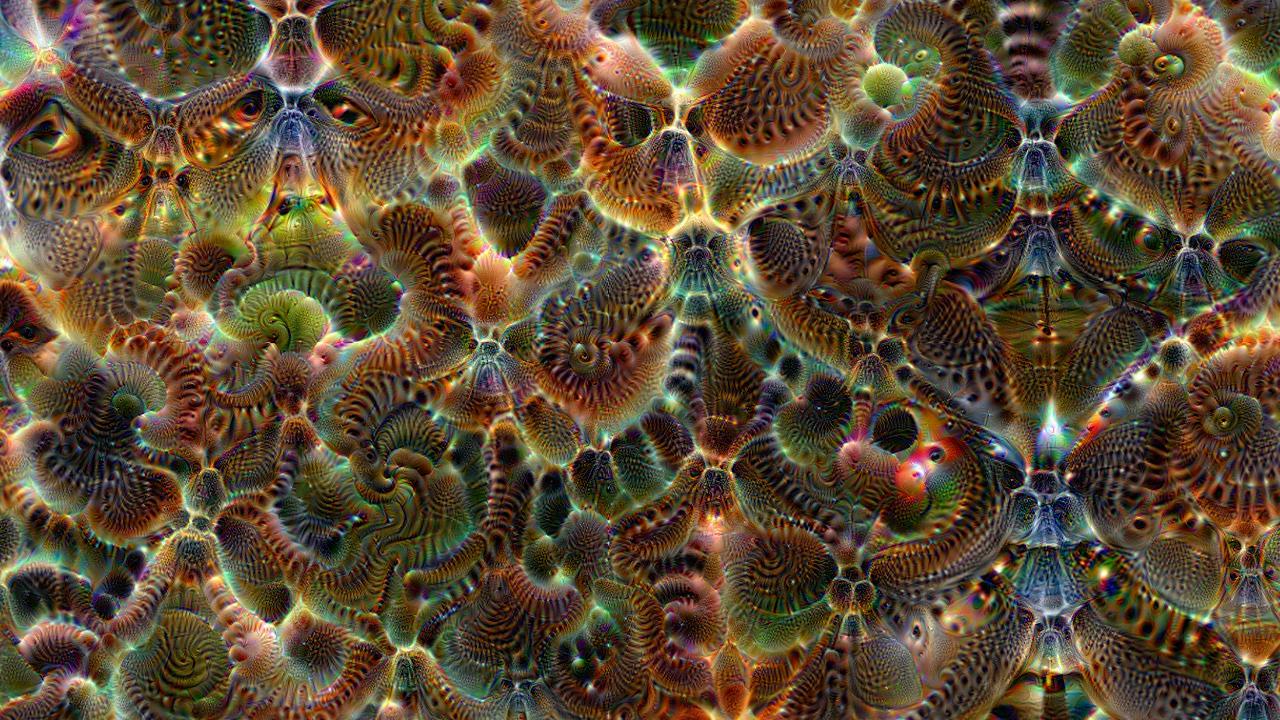

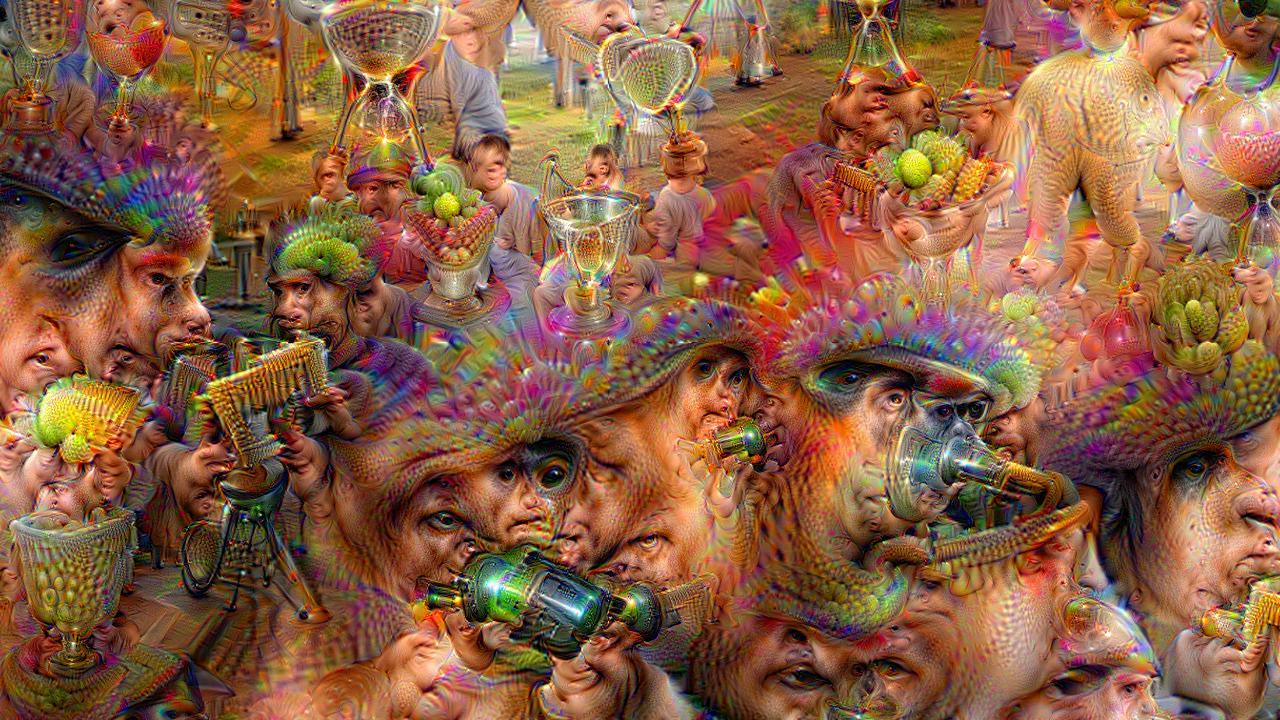

This section shows a video that walks through all the layers of a deep neural network, together with one selected frame per layer. The convolutional neural network was pretrained on ImageNet. The first input image is a picture of myself, and at every step the image is zoomed in with a ratio of 0.05. At every step, the current input image (frame) is forwarded up to the currently selected hidden layer. The error at that layer is set equal to the layer's own activations, which amounts to gradient ascent on the squared L2 norm of those activations and therefore pushes all of them to grow. A backward pass is then computed to modify the input image. After 100 zoom iterations on one layer, the next layer is used. The layers follow the default order in the Caffe prototxt file, which can be seen in the following file:

The code I used is essentially google/deepdream without the IPython Notebook parts, combined with some parts from DeepDreamVideo. The code is available in perellonieto/DeepTrip.
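The forward/backward step described above can be illustrated with a small toy example. This is only a sketch under stated assumptions, not the DeepTrip or google/deepdream code: a single hypothetical ReLU layer with random weights stands in for the pretrained network, the function names (`forward`, `objective`, `dream_step`) are made up for illustration, and the step normalisation by the mean absolute gradient is an assumption borrowed from common Deep Dream implementations. It is written with the Python standard library only, so it runs without Caffe.

```python
import random


def forward(weights, image):
    """Toy hidden layer, ReLU(W @ image), standing in for one CNN layer."""
    return [max(0.0, sum(w * x for w, x in zip(row, image))) for row in weights]


def objective(activations):
    """0.5 * squared L2 norm; its gradient w.r.t. the activations is the
    activations themselves, which is why the 'error' can be set to them."""
    return 0.5 * sum(a * a for a in activations)


def dream_step(weights, image, step_size=0.1):
    """One gradient-ascent step on the input image (hypothetical helper)."""
    acts = forward(weights, image)
    # The error at the layer is set to the layer's own activations.
    top_grad = acts
    # Backward pass through the toy layer: W^T @ top_grad. Inactive ReLU
    # units have zero activation, so they contribute nothing.
    grad = [
        sum(weights[j][i] * top_grad[j] for j in range(len(weights)))
        for i in range(len(image))
    ]
    mean_abs = sum(abs(g) for g in grad) / len(grad)
    if mean_abs == 0.0:
        return list(image)  # no unit is active, nothing to amplify
    # Assumed normalisation: scale the step by the mean absolute gradient.
    scale = step_size / mean_abs
    return [x + scale * g for x, g in zip(image, grad)]


random.seed(0)
weights = [[random.uniform(-1, 1) for _ in range(16)] for _ in range(8)]
image = [random.uniform(0, 1) for _ in range(16)]

before = objective(forward(weights, image))
for _ in range(100):  # 100 iterations, as for one layer in the video
    image = dream_step(weights, image)
after = objective(forward(weights, image))

print("objective before:", before)
print("objective after: ", after)
```

In the real setting the toy layer would be replaced by a forward pass of the pretrained network up to the selected layer, and the gradient would come from the framework's backward pass rather than from an explicit matrix product.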

To synchronize the music with the video and create a slow-motion effect in certain frames, I used the freely available program SlowmoVideo.

[0]Initial input data

[1]conv1/7x7_s2

[2]pool1/3x3_s2

[3]pool1/norm1

[4]conv2/3x3_reduce

[5]conv2/3x3

[6]conv2/norm2

[7]pool2/3x3_s2

[12]inception_3a/1x1

[13]inception_3a/3x3_reduce

[14]inception_3a/3x3

[15]inception_3a/5x5_reduce

[16]inception_3a/5x5

[17]inception_3a/pool

[18]inception_3a/pool_proj

[19]inception_3a/output

[24]inception_3b/1x1

[25]inception_3b/3x3_reduce

[26]inception_3b/3x3

[27]inception_3b/5x5_reduce

[28]inception_3b/5x5

[29]inception_3b/pool

[30]inception_3b/pool_proj

[31]inception_3b/output

[32]pool3/3x3_s2

[37]inception_4a/1x1

[38]inception_4a/3x3_reduce

[39]inception_4a/3x3

[40]inception_4a/5x5_reduce

[41]inception_4a/5x5

[42]inception_4a/pool

[43]inception_4a/pool_proj

[44]inception_4a/output

[49]inception_4b/1x1

[50]inception_4b/3x3_reduce

[51]inception_4b/3x3

[52]inception_4b/5x5_reduce

[53]inception_4b/5x5

[54]inception_4b/pool

[55]inception_4b/pool_proj

[56]inception_4b/output

[61]inception_4c/1x1

[62]inception_4c/3x3_reduce

[63]inception_4c/3x3

[64]inception_4c/5x5_reduce

[65]inception_4c/5x5

[66]inception_4c/pool

[67]inception_4c/pool_proj

[68]inception_4c/output

[73]inception_4d/1x1

[74]inception_4d/3x3_reduce

[75]inception_4d/3x3

[76]inception_4d/5x5_reduce

[77]inception_4d/5x5

[78]inception_4d/pool

[79]inception_4d/pool_proj

[80]inception_4d/output

[85]inception_4e/1x1

[86]inception_4e/3x3_reduce

[87]inception_4e/3x3

[88]inception_4e/5x5_reduce

[89]inception_4e/5x5

[90]inception_4e/pool

[91]inception_4e/pool_proj

[92]inception_4e/output

[93]pool4/3x3_s2

[98]inception_5a/1x1

[99]inception_5a/3x3_reduce

[100]inception_5a/3x3

[101]inception_5a/5x5_reduce

[102]inception_5a/5x5

[103]inception_5a/pool

[104]inception_5a/pool_proj

[105]inception_5a/output

[110]inception_5b/1x1

[111]inception_5b/3x3_reduce

[112]inception_5b/3x3

[113]inception_5b/5x5_reduce

[114]inception_5b/5x5

[115]inception_5b/pool

[116]inception_5b/pool_proj

[117]inception_5b/output

[118]pool5/7x7_s1
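The layer schedule described in the text (100 zoom iterations per layer, then move on to the next one) can be sketched as a simple mapping from frame index to layer name. This is a minimal illustration, not the author's code: the function name `layer_for_frame`, the clamping at the last layer, and the assumption that frame 0 already uses the first listed layer are all hypothetical, and only the first three layer names from the list above are used.

```python
def layer_for_frame(frame_index, layers, frames_per_layer=100):
    """Return the layer to dream on for a given frame (hypothetical helper).

    Assumes each layer is used for `frames_per_layer` consecutive frames and
    that the last layer keeps being used once the list is exhausted.
    """
    if frame_index < 0:
        raise ValueError("frame_index must be non-negative")
    position = min(frame_index // frames_per_layer, len(layers) - 1)
    return layers[position]


# First few layer names from the list above, used only as sample input.
layers = ["conv1/7x7_s2", "pool1/3x3_s2", "pool1/norm1"]

for frame in (0, 99, 100, 250):
    print(frame, layer_for_frame(frame, layers))
```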