Tapani Raiko

Deep Learning

Deep learning is an area of machine learning research that concentrates on finding hierarchical representations of data, moving from observations towards increasingly abstract representations. This page describes some of my contributions in the area; see the Deep Learning and Bayesian modelling group page for more general information.

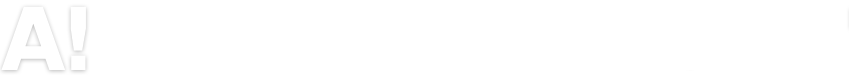

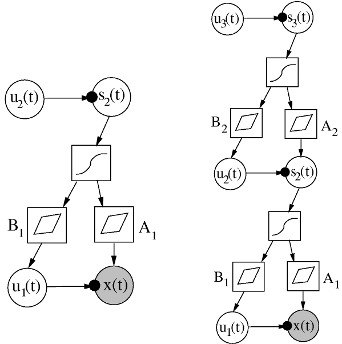

We presented some early work in that direction in (Valpola et al. 2001, Raiko et al. 2007) using variational Bayesian learning in directed graphical models (see figure). My master's thesis (Raiko 2001) was also on layerwise training of hierarchical unsupervised models.

One building block of deep networks is the restricted Boltzmann machine (RBM), which is based on undirected connections between a visible and a hidden layer, each consisting of a binary vector. Learning such models was known to be rather cumbersome, but we proposed several improvements to the learning algorithm in (Cho et al. 2013a, Cho et al. 2010, Cho et al. 2011a) that make it stable and robust to the choice of learning parameters. The Gaussian-Bernoulli restricted Boltzmann machine (GRBM) is a version for continuous-valued data; we improved its learning algorithm in (Cho et al. 2011b) and extended it to a deep version in (Cho et al. 2013b).
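
To make the structure of a binary RBM concrete, here is a minimal sketch of its standard textbook energy function and conditional distributions. It is not the improved learning algorithm from the papers cited above; the function names and the toy weights `W`, `b`, `c` are illustrative assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def energy(v, h, W, b, c):
    """Standard RBM energy: E(v, h) = -b.v - c.h - v^T W h."""
    visible_term = sum(b_i * v_i for b_i, v_i in zip(b, v))
    hidden_term = sum(c_j * h_j for c_j, h_j in zip(c, h))
    interaction = sum(
        v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h))
    )
    return -(visible_term + hidden_term + interaction)


def hidden_probs(v, W, c):
    """p(h_j = 1 | v). With no hidden-hidden connections, the hidden
    units are conditionally independent given the visible layer."""
    return [
        sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
        for j in range(len(c))
    ]


def visible_probs(h, W, b):
    """p(v_i = 1 | h), by the same argument with the roles swapped."""
    return [
        sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
        for i in range(len(b))
    ]


# Toy model (assumed values): 3 visible units, 2 hidden units.
W = [[0.5, -1.0], [0.0, 2.0], [1.5, 0.0]]
b = [0.0, 0.0, 0.0]
c = [0.0, -1.0]
v = [1, 0, 1]

print(hidden_probs(v, W, c))
print(energy(v, [1, 0], W, b, c))
```

Because each layer factorizes given the other, sampling alternates between `hidden_probs` and `visible_probs`, which is the basis of the usual Gibbs-sampling-based training procedures.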

It was long thought that deep networks could not be trained with the traditional back-propagation algorithm. In (Raiko et al. 2012, Vatanen et al. 2013), we proposed a simple reparameterization of the neural network learning problem by linearly transforming its nonlinearities. This makes basic stochastic gradient descent competitive with state-of-the-art optimization methods and allows learning of deep networks at least up to five hidden layers. This helps, for instance, when doing unsupervised pretraining less greedily by learning two layers at a time (Schulz et al. 2013).
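
The idea of linearly transforming a nonlinearity can be illustrated with a small sketch. It assumes the transformation has the form f(x) + αx + β, with α and β chosen so that the transformed unit has zero mean output and zero mean slope over the data; the shortcut connections that would compensate for the change in a full network are omitted, and the function names are hypothetical.

```python
import math
import random


def tanh_slope(x):
    return 1.0 - math.tanh(x) ** 2


def fit_transformation(pre_activations):
    """Choose alpha and beta so that tanh(x) + alpha * x + beta has
    zero average slope and zero average output over the samples."""
    n = len(pre_activations)
    alpha = -sum(tanh_slope(x) for x in pre_activations) / n
    beta = -sum(math.tanh(x) + alpha * x for x in pre_activations) / n
    return alpha, beta


def transformed(x, alpha, beta):
    return math.tanh(x) + alpha * x + beta


# Synthetic pre-activations (assumed data, for illustration only).
rng = random.Random(0)
xs = [rng.gauss(0.5, 1.0) for _ in range(1000)]

alpha, beta = fit_transformation(xs)
mean_output = sum(transformed(x, alpha, beta) for x in xs) / len(xs)
mean_slope = sum(tanh_slope(x) + alpha for x in xs) / len(xs)

print(alpha, beta)
print(mean_output, mean_slope)  # both close to zero by construction
```

In this reading, the nonlinear unit is left to model only what a linear mapping cannot, which is one way to understand why plain gradient-based training becomes better conditioned.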

While my work has concentrated on theory and algorithms, some of it is applied. In (Hao et al. 2012), we designed a model specifically for texture analysis. In (Keronen et al. 2013), we concentrated on noise-robust speech recognition.

Links:

I will be teaching in the Deep Learning summer school in Copenhagen, 24-28 Aug, 2015.

My slides for the panel discussion on deep learning in ICONIP 2013

Research of our group Deep Learning and Bayesian Modeling

Deep Learning portal