The natural gradient learning algorithm is analogous to conventional

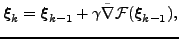

gradient ascent algorithm and is given by the iteration

|

(12) |

where the step size  can either be adjusted adaptively during

learning [9] or computed for each iteration using e.g. line search. In general, the performance of natural

gradient learning is superior to conventional gradient learning when the

problem space is Riemannian; see [9].
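As a rough illustration of the iteration described above, the following minimal sketch runs natural gradient ascent with a fixed step size on a toy problem that is not taken from the original text: fitting the mean and standard deviation of a Gaussian to a few data points by maximising the average log-likelihood. The function names (`natural_gradient_ascent`, `grad`, `fisher`), the data, and the fixed step size `gamma = 0.5` are all assumptions made for the example; an adaptive step size or a line search, as mentioned above, would replace the constant `gamma`.

```python
import numpy as np


def natural_gradient_ascent(grad, fisher, xi0, gamma=0.5, n_iter=200):
    """Iterate xi <- xi + gamma * G(xi)^{-1} grad(xi) with a fixed step size.

    grad   : callable returning the ordinary gradient of the objective
    fisher : callable returning the Riemannian metric (Fisher matrix) G(xi)
    """
    xi = np.asarray(xi0, dtype=float)
    for _ in range(n_iter):
        # Natural gradient: solve G * d = grad instead of forming G^{-1}.
        nat_grad = np.linalg.solve(fisher(xi), grad(xi))
        xi = xi + gamma * nat_grad
    return xi


# Toy data (assumed for illustration only).
x = np.array([1.0, 2.0, 4.0, 5.0])


def grad(xi):
    """Gradient of the average Gaussian log-likelihood w.r.t. (mu, sigma)."""
    mu, sigma = xi
    d_mu = np.mean(x - mu) / sigma**2
    d_sigma = -1.0 / sigma + np.mean((x - mu) ** 2) / sigma**3
    return np.array([d_mu, d_sigma])


def fisher(xi):
    """Fisher information of N(mu, sigma^2) in (mu, sigma) coordinates."""
    _, sigma = xi
    return np.diag([1.0 / sigma**2, 2.0 / sigma**2])


xi_hat = natural_gradient_ascent(grad, fisher, xi0=[0.0, 1.0])
print(xi_hat)  # approaches the sample mean and (biased) sample std of x
```

In this toy case the Fisher matrix rescales the gradient by the current variance, so the update in the mean no longer depends on how wide or narrow the current Gaussian is. This is one way to see why following the natural gradient can behave better than following the plain gradient in such a parameter space.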


Tapani Raiko

2007-09-11