![\includegraphics[width=0.3\textwidth]{prm_wines.eps}](img326.png)

![\includegraphics[width=0.5\textwidth]{blp_wines.eps}](img327.png)


Chapters 3 and 4 study machine learning from data containing discrete and continuous values. Statistical relational learning, also called probabilistic logic learning, adds another element: references are used to describe relationships between objects. For example, the contents of a web page can be described by a number of attributes, but the links between pages are important as well. Taskar et al. (2002) show that using relational information in the classification of web pages makes the task much easier.

First-order logic is one way of handling references (or relations). Statistical relational learning can also be seen as an extension of inductive logic programming (ILP), described in Chapter 5. The motivation for upgrading ILP to incorporate probabilities is that the data often contain noise or errors, which calls for a probabilistic approach.

There is a large body of work concerning statistical relational learning in many different frameworks. See (De Raedt and Kersting, 2003) for an overview and references. Two of these frameworks will be briefly reviewed in the following.

The formalism of probabilistic relational models (PRMs) by Koller (1999) and Getoor et al. (2001) provides an elegant graphical representation of objects, attributes, and references (see Figure 6.1). Its close analogy with relational databases and object-oriented programming has perhaps made the formalism quite popular. A PRM consists of two parts: the relational schema for the domain and the probabilistic component. Given a database, the relational schema defines a structure for a Bayesian network over the attributes in the data. The probabilistic component describes dependencies among attributes, both within the same object and between attributes of related objects. The conceptual simplicity comes at a cost in generality. For instance, probabilistic dependencies between links are harder to represent and require special treatment (Getoor et al., 2002). PRMs have been applied, for instance, to gene expression data by Segal et al. (2001).
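The way a relational schema induces a Bayesian network structure over a database can be illustrated with a minimal sketch. The toy tables, the reference slots `person` and `wine`, and the attributes `opinion`, `quality`, and `taste` are all hypothetical and chosen only for illustration; this is not the notation or algorithm of any PRM implementation.

```python
# Hypothetical toy database: each tasting row references one person and one wine.
tastings = [
    {"id": "t1", "person": "ann", "wine": "w1"},
    {"id": "t2", "person": "bob", "wine": "w1"},
]

# Hypothetical class-level dependencies:
# (child attribute, reference slot to follow, parent attribute of the referenced object).
template = [
    ("opinion", "wine", "quality"),
    ("opinion", "person", "taste"),
]


def unroll(rows, template):
    """Instantiate the class-level dependencies once for every row."""
    parents = {}
    for row in rows:
        for child_attr, slot, parent_attr in template:
            child = f"{row['id']}.{child_attr}"
            parent = f"{row[slot]}.{parent_attr}"
            parents.setdefault(child, []).append(parent)
    return parents


# Maps each attribute node to the list of its parent nodes.
network = unroll(tastings, template)
```

In this sketch both tastings share the parent node `w1.quality`, which is how attributes of related objects become dependent in the unrolled network.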

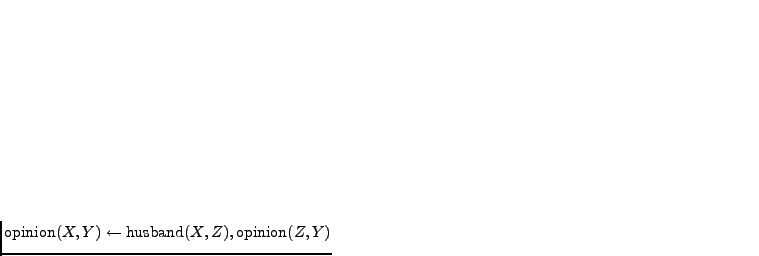

Kersting and De Raedt (2001, 2006) introduced the framework of Bayesian logic programmes (BLPs). BLPs generalise Bayesian networks, logic programming, and probabilistic relational models. Each atom has a random variable associated with it. For each clause, there is a conditional distribution of the head given the body. The proofs of all statements form a (possibly infinite) Bayesian network where atoms are nodes and the head atom of each clause is a child node of the nodes in the body of the clause. Again, given the logical part of the data, a BLP forms a Bayesian network over the attributes of the data. Since Bayesian networks must be acyclic, the dependency structures induced by both PRMs and BLPs must be acyclic as well.
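The construction of a network from clauses, and the acyclicity requirement, can be sketched as follows. This is a minimal illustration that assumes the clauses have already been grounded; the atoms `p(a)`, `q(a)`, and `r(a)` and the function names are hypothetical, and the conditional distributions are left out.

```python
from graphlib import CycleError, TopologicalSorter


def build_network(ground_clauses):
    """Map each atom to the set of its parents (the body atoms of its clauses)."""
    parents = {}
    for head, body in ground_clauses:
        parents.setdefault(head, set()).update(body)
        for atom in body:
            parents.setdefault(atom, set())
    return parents


def topological_order(parents):
    """Return atoms ordered parents-first, or None if the structure is cyclic."""
    try:
        return list(TopologicalSorter(parents).static_order())
    except CycleError:
        return None


# Hypothetical ground clauses given as (head, body) pairs.
clauses = [
    ("p(a)", []),
    ("q(a)", ["p(a)"]),
    ("r(a)", ["p(a)", "q(a)"]),
]
network = build_network(clauses)
order = topological_order(network)
```

A result of `None` from `topological_order` signals a cyclic set of clauses, which would not define a valid Bayesian network.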

Let us continue the wine tasting example in Chapter 5 and discuss it from a BLP point of view. Instead of […] atoms we use […] and associate a variable (attribute) to the atom that actually tells what the opinion is. Then the rule […] means that the opinion on wine […] depends on the same person's opinion on wine […]. The rule […] tells that the opinion about a wine depends on the wife's opinion on the same wine. The actual probabilistic dependencies are placed in conditional probability distributions associated to each rule. Note that whereas a rule in ILP can only make the atom […] true but never false, a rule in BLP can change the opinion to good or bad.
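The role of the conditional probability distribution attached to a rule can be made concrete with a small numerical sketch. It covers only the rule relating a person's opinion to the wife's opinion on the same wine; the variable names and all probabilities are invented for illustration and do not come from the example in Chapter 5.

```python
# Hypothetical prior over the wife's opinion on a given wine.
prior_wife = {"good": 0.7, "bad": 0.3}

# Hypothetical conditional distribution attached to the rule:
# P(husband's opinion | wife's opinion on the same wine).
cpd_given_wife = {
    "good": {"good": 0.8, "bad": 0.2},
    "bad": {"good": 0.3, "bad": 0.7},
}


def marginal(prior, cpd):
    """Sum out the parent to obtain the distribution of the child."""
    result = {}
    for parent_value, p_parent in prior.items():
        for child_value, p_child in cpd[parent_value].items():
            result[child_value] = result.get(child_value, 0.0) + p_parent * p_child
    return result


# Without evidence, the husband's opinion follows the marginal distribution.
husband = marginal(prior_wife, cpd_given_wife)

# If the wife's opinion is observed to be bad, the rule shifts the
# husband's distribution toward bad as well.
husband_given_bad = cpd_given_wife["bad"]
```

With these made-up numbers the husband's opinion is good with probability 0.65 when nothing is observed, and only 0.3 once the wife is known to dislike the wine, so the rule can move the opinion in either direction.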